
Integrating Site-Specific MPI with an OpenFOAM Official Apptainer Image on Slurm-Managed HPC Environments

In our previous blog post, OpenFOAM Supports Apptainer v1.2.0 Template Definition File Feature, I showed you how to use OpenFOAM (R) (OpenCFD Ltd.) with Apptainer. In A New Approach to MPI in Apptainer, I demonstrated how to build and run a portable MPI container with Apptainer's “Fully Containerized Model” solution.

In this article, I will focus on how to efficiently build fully containerized, portable MPI containers with Apptainer. I introduce the “swapping approach” with the example of integrating a site-specific MPI into the OpenFOAM (R) (OpenCFD Ltd.) pre-built image. This approach integrates the site-specific MPI without rebuilding the application.

Since v2306, OpenFOAM has distributed a pre-built official Apptainer image on Docker Hub. The first example uses this pre-built image and illustrates how the swapping approach can efficiently build a fully containerized MPI application container without rebuilding OpenFOAM. (The second example provides a peek into what daily life is like for HPC-ers 😁.) Finally, to cover a broader set of use cases, we will show you how the “swapping approach” works for other MPI applications.

OpenFOAM Official Pre-built Image on Docker Hub

Let’s start with running the OpenFOAM official pre-built image!

apptainer run oras://docker.io/opencfd/openfoam-dev:2306-apptainer

Note that it takes a while to download the image (about 311MB). Apptainer v1.2.2, the latest version at the time of writing, does not display a progress bar for ORAS downloads, so there is no indication of download progress.

INFO: Downloading oras image

We are working on implementing a progress bar for pushing and pulling images with the ORAS protocol. The upcoming feature will look like the following. (While the OpenFOAM pre-built image is downloading, please enjoy the following animation 🙂):

After successfully downloading the image, you will see the following outputs:

INFO: underlay of /etc/localtime required more than 50 (86) bind mounts

---------------------------------------------------------------------------

========= |

\\ / F ield | OpenFOAM in a container [from OpenCFD Ltd.]

\\ / O peration |

\\ / A nd | www.openfoam.com

\\/ M anipulation |

---------------------------------------------------------------------------

Release notes: https://www.openfoam.com/news/main-news/openfoam-v2306

Documentation: https://www.openfoam.com/documentation/

Issue Tracker: https://develop.openfoam.com/Development/openfoam/issues/

Local Help: more /openfoam/README

---------------------------------------------------------------------------

System : Ubuntu 22.04.2 LTS

OpenFOAM : /usr/lib/openfoam/openfoam2306

Build : _fbf00d6b-20230626 OPENFOAM=2306 patch=0

Note

Different OpenFOAM components and modules may be present (or missing)

on any particular container installation.

Eg, source code, tutorials, in-situ visualization, paraview plugins,

external linear-solver interfaces etc.

---------------------------------------------------------------------------

apptainer-openfoam2306:~/

ysenda$

Now we have confirmed that we can use OpenFOAM v2306 just by pulling the image from Docker Hub, without building anything. Next, we will show you how to integrate a site-specific MPI into this pre-built OpenFOAM image. We accomplish this by using the “swapping approach” to create a “fully containerized” OpenFOAM/site-specific MPI container.

Fully Containerized MPI Container by Swapping Approach

In this section, we will demonstrate how to build the “fully containerized” OpenFOAM/site-specific MPI Apptainer image for a Slurm-managed HPC cluster environment by using the “swapping approach.”


First, check which MPI plugin types (PMI protocols) Slurm supports in your environment with the following command:

srun --mpi=list

PMI-2 is supported in our test environment:

MPI plugin types are...

cray_shasta

none

pmi2
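If you want a setup script to check for PMI-2 before choosing how to launch jobs, a small helper can parse that listing. This is a minimal sketch: has_mpi_plugin is a hypothetical name, and it assumes plugin names appear one per line, possibly indented or prefixed with "srun: " (the exact layout varies between Slurm versions).

```bash
# Sketch: succeed if a given MPI plugin type appears in `srun --mpi=list` output.
# Reads the listing on stdin so it can be tried without a Slurm cluster.
has_mpi_plugin() {
  local wanted="$1" line
  while IFS= read -r line; do
    line="${line#srun: }"          # drop an optional "srun: " prefix
    line="${line//[[:space:]]/}"   # remove all whitespace (plugin names contain none)
    [[ "$line" == "$wanted" ]] && return 0
  done
  return 1
}

# Example on a login node:
#   srun --mpi=list 2>&1 | has_mpi_plugin pmi2 && echo "PMI-2 available"
```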

Run Tutorial with OpenFOAM Official Pre-built Image via Slurm

The OpenFOAM v2306 image uses OpenMPI v4.1.2 (see the mpirun --version output inside the container) and PMIx (see the orte-info output inside the container). In our test environment, Slurm only supports PMI-2. If we run the tutorial with the official OpenFOAM image via Slurm without swapping in an appropriate OpenMPI build, MPI initialization fails, because the OpenMPI inside the image was not built with Slurm PMI-2 support.
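To script the version check, a helper can pull the number out of mpirun --version. This is a minimal sketch (ompi_version is a hypothetical name) that assumes the first output line has the form mpirun (Open MPI) X.Y.Z, as Open MPI 4.x prints it.

```bash
# Sketch: print the Open MPI version from `mpirun --version` output on stdin.
# Returns non-zero if the first line does not match "mpirun (Open MPI) X.Y.Z".
ompi_version() {
  local first
  IFS= read -r first || return 1
  [[ "$first" =~ \(Open\ MPI\)\ ([0-9]+\.[0-9]+\.[0-9]+) ]] || return 1
  printf '%s\n' "${BASH_REMATCH[1]}"
}

# Example:
#   apptainer exec openfoam-dev_2306-apptainer.sif mpirun --version | ompi_version
```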

Pull the official image and store it as a single file called openfoam-dev_2306-apptainer.sif

cd ~/

apptainer pull oras://docker.io/opencfd/openfoam-dev:2306-apptainer

Move the downloaded image to one of your PATH locations (e.g., ~/bin)

mv openfoam-dev_2306-apptainer.sif ~/bin

Clone the OpenFOAM v2306 branch to get the corresponding tutorials

git clone -b OpenFOAM-v2306 https://develop.openfoam.com/Development/openfoam.git

Change to the tutorial case directory

cd openfoam/tutorials/incompressible/icoFoam/cavity/cavity

Run the tutorial via Slurm. (Please change the partition to match your environment. We use opa here.)

openfoam-dev_2306-apptainer.sif blockMesh

openfoam-dev_2306-apptainer.sif decomposePar

srun --mpi=pmi2 --ntasks=9 --tasks-per-node=9 \
    --partition=opa \
    openfoam-dev_2306-apptainer.sif icoFoam -parallel

We got the expected error messages; here's the gist of the error message.

--------------------------------------------------------------------------

The application appears to have been direct launched using "srun",

but OMPI was not built with SLURM's PMI support and therefore cannot

execute. There are several options for building PMI support under

SLURM, depending upon the SLURM version you are using:

version 16.05 or later: you can use SLURM's PMIx support. This

requires that you configure and build SLURM --with-pmix.

Versions earlier than 16.05: you must use either SLURM's PMI-1 or

PMI-2 support. SLURM builds PMI-1 by default, or you can manually

install PMI-2. You must then build Open MPI using --with-pmi pointing

to the SLURM PMI library location.

Please configure as appropriate and try again.

--------------------------------------------------------------------------

*** An error occurred in MPI_Init_thread

*** on a NULL communicator

*** MPI_ERRORS_ARE_FATAL (processes in this communicator will now abort,

*** and potentially your MPI job)

[c5:804957] Local abort before MPI_INIT completed completed successfully, but am not able to aggregate error messages, and not able to guarantee that all other processes were killed!

[c5:804918] OPAL ERROR: Unreachable in file ext3x_client.c at line 112

The error message suggests building OpenMPI against the Slurm PMI-2 library; the other option is to rebuild Slurm with PMIx. In the next step, we will build OpenMPI against Slurm PMI-2 and integrate that MPI into the OpenFOAM pre-built container via the swapping approach.

Swapping Approach: Solve The Problem in an Apptainer-wise Way

Slurm in our test environment speaks PMI-2. To run the tutorial successfully via Slurm, we need OpenMPI built against the Slurm PMI-2 library; here we call this the “site-specific MPI.”

After building the site-specific MPI, we would usually need to rebuild OpenFOAM against it. However, that takes 1-2 hours even on a high-end machine, and we want to avoid rebuilding OpenFOAM site by site so that the image stays as portable as possible.

Luckily, OpenFOAM has a configuration file that lets us change the MPI library path. We use this feature to swap in the site-specific MPI library while avoiding a rebuild of OpenFOAM.

If the major version of OpenMPI changes, simply swapping the MPI library path probably won't work, because the OpenFOAM binaries were linked against a specific OpenMPI series. We therefore build the same major version: OpenMPI v4.1.4 against the Slurm PMI-2 library (the OpenFOAM image is built against OpenMPI v4.1.2).
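The rule of thumb above can be written as a tiny guard for build scripts. This sketch (same_ompi_major is a hypothetical helper) compares only the leading version component; it encodes the heuristic used in this article and does not by itself prove ABI compatibility.

```bash
# Sketch: succeed if two Open MPI version strings share the same major version.
same_ompi_major() {
  local built_with="$1" candidate="$2"
  # ${var%%.*} keeps everything before the first dot, i.e. the major version.
  [[ "${built_with%%.*}" == "${candidate%%.*}" ]]
}

same_ompi_major 4.1.2 4.1.4 && echo "4.1.4 is a swap candidate for 4.1.2"
```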

Building OpenMPI Against the Slurm PMI-2 Library

To run the official OpenFOAM image in this environment, we build OpenMPI against the Slurm PMI-2 library and containerize it. Then, in a later step, we copy this site-specific MPI from its container image into the OpenFOAM image.

Create the pmi2-openmpi4-apptainer.def file with the following content. The official OpenFOAM image uses Ubuntu 22.04 as its base OS, so we use Ubuntu 22.04 for the OpenMPI container as well. (We will show the Rocky Linux 8 version later.)

Bootstrap: docker

From: ubuntu:22.04

%post

apt update

apt install -y build-essential

apt install -y wget hwloc libpmi2-0 libpmi2-0-dev

apt clean

rm -rf /var/lib/apt/lists/*

wget https://download.open-mpi.org/release/open-mpi/v4.1/openmpi-4.1.4.tar.gz

tar zxvf openmpi-4.1.4.tar.gz && rm openmpi-4.1.4.tar.gz

cd openmpi-4.1.4

./configure --with-hwloc=internal --prefix=/opt/openmpi/4.1.4 --with-slurm \
    --with-pmi=/usr --with-pmi-libdir=/usr/lib/x86_64-linux-gnu

make -j $(nproc)

make install

Build this site-specific MPI image.

apptainer build pmi2-openmpi4-apptainer.sif pmi2-openmpi4-apptainer.def

Now we have the pmi2-openmpi4-apptainer.sif image, which contains the site-specific MPI under /opt/openmpi/4.1.4. In the next step, we will copy this site-specific MPI into the official OpenFOAM image using Apptainer's multi-stage build feature.
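Before moving on, you may want to confirm that the build actually picked up Slurm PMI-2. This is a minimal sketch that assumes Open MPI 4.x lists its PMI-2 client in ompi_info as the s2 component of the pmix framework. has_pmi2_component is a hypothetical name.

```bash
# Sketch: succeed if `ompi_info` output (on stdin) lists the PMI-2 ("s2") pmix component.
has_pmi2_component() {
  grep -Eq 'MCA pmix: s2 '
}

# Example:
#   apptainer exec pmi2-openmpi4-apptainer.sif /opt/openmpi/4.1.4/bin/ompi_info \
#     | has_pmi2_component && echo "PMI-2 support found"
```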

Integrate Site-Specific MPI into the OpenFOAM Official Image and Swap MPI

Create pmi2-openfoam2306-apptainer.def with the following content:

Bootstrap: localimage

From: pmi2-openmpi4-apptainer.sif

Stage: mpi

%post

# You can just eliminate this post section here

# I keep this section to clearly show you there are 2 stages in this def file.

Bootstrap: oras

From: docker.io/opencfd/openfoam-dev:2306-apptainer

Stage: runtime

%files from mpi

/opt/openmpi/4.1.4 /opt/openmpi/4.1.4

%post

apt update

apt install -y libpmi2-0

apt clean

rm -rf /var/lib/apt/lists/*

%post

echo 'export MPI_ARCH_PATH=/opt/openmpi/4.1.4' > \
    $(bash /openfoam/assets/query.sh -show-prefix)/etc/config.sh/prefs.sys-openmpi

%runscript

exec /openfoam/run "$@"

This definition file has two stages: (1) mpi and (2) runtime. The first stage uses the MPI container we just built. The second stage's %files from mpi section copies the directory /opt/openmpi/4.1.4 from the first stage into the second. Next, the required runtime library (libpmi2-0) is installed in the second stage. Finally, we swap the MPI path from the original one to /opt/openmpi/4.1.4 by writing the etc/config.sh/prefs.sys-openmpi configuration file in the OpenFOAM installation directory.
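The swap itself is a single line written into prefs.sys-openmpi. The sketch below wraps that line in a function. write_openmpi_prefs is a hypothetical name, and the OpenFOAM prefix is passed in as an argument so you can try the function outside a container. Inside the image, the prefix is whatever query.sh -show-prefix prints. Unlike the one-line echo, the function also creates the etc/config.sh directory if it is missing.

```bash
# Sketch: point OpenFOAM's OpenMPI configuration at a swapped-in MPI installation.
write_openmpi_prefs() {
  local openfoam_prefix="$1" mpi_path="$2"
  local prefs="$openfoam_prefix/etc/config.sh/prefs.sys-openmpi"
  mkdir -p "$(dirname "$prefs")"
  # Overwrite the prefs file with the single MPI_ARCH_PATH line.
  printf 'export MPI_ARCH_PATH=%s\n' "$mpi_path" > "$prefs"
}

# Inside a %post section this would be called as:
#   write_openmpi_prefs "$(bash /openfoam/assets/query.sh -show-prefix)" /opt/openmpi/4.1.4
```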

Build the MPI-swapped OpenFOAM image.

apptainer build pmi2-openfoam2306-apptainer.sif pmi2-openfoam2306-apptainer.def

Now your OpenFOAM image speaks PMI-2. Copy this image to one of your PATH locations.

cp pmi2-openfoam2306-apptainer.sif ~/bin

Running Tutorial Again with the Site-Specific MPI Integrated OpenFOAM Image via Slurm

Now it’s time to retry the tutorial case that failed earlier.

Change to the tutorial case directory again.

cd openfoam/tutorials/incompressible/icoFoam/cavity/cavity

Run the tutorial via Slurm with the OpenFOAM image that integrates the site-specific MPI. (Please change the partition to match your environment.)

pmi2-openfoam2306-apptainer.sif blockMesh

pmi2-openfoam2306-apptainer.sif decomposePar -force

srun --mpi=pmi2 --ntasks=9 --tasks-per-node=9 \
    --partition=opa \
    pmi2-openfoam2306-apptainer.sif icoFoam -parallel
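The parallel run only works when the number of MPI tasks matches the decomposition: decomposePar writes one processor<N> directory per subdomain. A minimal pre-flight check might look like the sketch below. check_decomposition is a hypothetical helper; pass it the same value you give to srun --ntasks.

```bash
# Sketch: fail if the number of processor<N> directories differs from the task count.
check_decomposition() {
  local case_dir="$1" ntasks="$2" n=0 d
  for d in "$case_dir"/processor[0-9]*; do
    [[ -d "$d" ]] && n=$((n + 1))   # unmatched glob stays literal and is skipped
  done
  if (( n != ntasks )); then
    echo "found $n processor directories but --ntasks=$ntasks" >&2
    return 1
  fi
}

# Example, from the cavity case directory:
#   check_decomposition . 9 && srun --mpi=pmi2 --ntasks=9 ...
```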

Now, the tutorial has successfully run. Let's move on to the motorBike tutorial!

Running motorBike Tutorial Using the Rocky Linux 8 Based OpenFOAM Image via Slurm

Before moving on to a more advanced example, build a Rocky Linux 8-based OpenFOAM image.

Build a Rocky Linux 8-based OpenFOAM Image

Clone the OpenFOAM container repository.

git clone https://develop.openfoam.com/packaging/containers.git

Change into the docker directory

cd containers/docker

Build the Rocky Linux 8-based OpenFOAM v2306 image

apptainer build openfoam2306.sif openfoam-run_rocky-template.def

Build Rocky Linux 8-based OpenMPI with Slurm PMI-2 Image

Create pmi2-ompi4.def with the following contents:

Bootstrap: docker

From: rockylinux/rockylinux:8

%post

dnf -y group install "Development tools"

dnf -y install epel-release

crb enable

dnf -y install wget

dnf -y install hwloc slurm-pmi slurm-pmi-devel

dnf -y clean all

wget https://download.open-mpi.org/release/open-mpi/v4.1/openmpi-4.1.4.tar.gz

tar zxvf openmpi-4.1.4.tar.gz && rm openmpi-4.1.4.tar.gz

cd openmpi-4.1.4

./configure --with-hwloc=internal --prefix=/opt/openmpi/4.1.4 \
    --with-slurm --with-pmi=/usr

make -j $(nproc)

make install

Build pmi2-ompi4.sif image

apptainer build pmi2-ompi4.sif pmi2-ompi4.def

Integrate Site-Specific MPI to the Rocky Linux 8-based OpenFOAM Image

Create pmi2-openfoam2306.def with the following content:

Bootstrap: localimage

From: pmi2-ompi4.sif

Stage: mpi

Bootstrap: localimage

From: openfoam2306.sif

Stage: runtime

%files from mpi

/opt/openmpi/4.1.4 /opt/openmpi/4.1.4

%post

dnf -y install slurm-pmi

dnf -y clean all

%post

echo 'export MPI_ARCH_PATH=/opt/openmpi/4.1.4' > $(bash /openfoam/assets/query.sh -show-prefix)/etc/config.sh/prefs.sys-openmpi

%runscript

exec /openfoam/run "$@"

Build pmi2-openfoam2306.sif image

apptainer build pmi2-openfoam2306.sif pmi2-openfoam2306.def

Move image to ~/bin

mv pmi2-openfoam2306.sif ~/bin

Running the motorBike Tutorial

Change the directory to the motorBike tutorial directory.

cd ~/openfoam/tutorials/incompressible/simpleFoam/motorBike

Set the decomposition to 128 subdomains, one per MPI process.

cat << 'EOF' > system/decomposeParDict

/*--------------------------------*- C++ -*----------------------------------*\

| ========= | |

| \\ / F ield | OpenFOAM: The Open Source CFD Toolbox |

| \\ / O peration | Version: 4.x |

| \\ / A nd | Web: www.OpenFOAM.org |

| \\/ M anipulation | |

\*---------------------------------------------------------------------------*/

FoamFile

{

version 2.0;

format ascii;

class dictionary;

note "mesh decomposition control dictionary";

object decomposeParDict;

}

// * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * * //

numberOfSubdomains 128;

method scotch;

EOF
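Because numberOfSubdomains must equal the number of MPI tasks, the 128 here has to agree with the job script's 4 nodes x 32 tasks per node. Below is a sketch of a check you could put at the top of job.sh. subdomains_in is a hypothetical helper that assumes the simple numberOfSubdomains N; layout written above. SLURM_JOB_NUM_NODES and SLURM_NTASKS_PER_NODE are variables that Slurm sets inside a batch job (the latter only when --ntasks-per-node is given).

```bash
# Sketch: print the numberOfSubdomains value from a decomposeParDict file.
subdomains_in() {
  sed -nE 's/^[[:space:]]*numberOfSubdomains[[:space:]]+([0-9]+)[[:space:]]*;.*/\1/p' "$1" | head -n 1
}

# Example inside job.sh:
#   expected=$(( SLURM_JOB_NUM_NODES * SLURM_NTASKS_PER_NODE ))
#   [[ "$(subdomains_in system/decomposeParDict)" == "$expected" ]] || exit 1
```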

The following is an example Slurm job script job.sh.

#!/bin/bash

#SBATCH --partition=opa

#SBATCH --nodes=4

#SBATCH --ntasks-per-node=32

mkdir -p constant/triSurface

cp ~/openfoam/tutorials/resources/geometry/motorBike.obj.gz constant/triSurface/

pmi2-openfoam2306.sif surfaceFeatureExtract

pmi2-openfoam2306.sif blockMesh

pmi2-openfoam2306.sif decomposePar

srun --mpi=pmi2 pmi2-openfoam2306.sif snappyHexMesh -parallel -overwrite

srun --mpi=pmi2 pmi2-openfoam2306.sif topoSet -parallel

ls -d processor* | xargs -I {} rm -rf ./{}/0

ls -d processor* | xargs -I {} cp -r 0.orig ./{}/0

srun --mpi=pmi2 pmi2-openfoam2306.sif patchSummary -parallel

srun --mpi=pmi2 pmi2-openfoam2306.sif potentialFoam -parallel -writephi

srun --mpi=pmi2 pmi2-openfoam2306.sif checkMesh -parallel -writeFields '(nonOrthoAngle)' -constant

srun --mpi=pmi2 pmi2-openfoam2306.sif simpleFoam -parallel

pmi2-openfoam2306.sif reconstructParMesh -constant

pmi2-openfoam2306.sif reconstructPar -latestTime
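The two ls -d processor* | xargs lines replace the 0 directory in every processor directory with a fresh copy of 0.orig. If you prefer not to parse ls output, you could use an equivalent loop over a glob instead. reset_initial_fields is a hypothetical name, and you must run it from the case directory.

```bash
# Sketch: replace processor*/0 with a fresh copy of 0.orig (run in the case directory).
reset_initial_fields() {
  local d
  for d in processor*/; do
    [[ -d "$d" ]] || continue     # no processor directories: nothing to do
    rm -rf "${d}0"
    cp -r 0.orig "${d}0"
  done
}
```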

Submit job with sbatch

sbatch job.sh

Check job status

squeue

Sample output while the job is running:

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

656 opa job.sh ciq R 0:03 4 c[5-8]

Check the output (replace JOBID with the job ID shown by sbatch or squeue)

tail -f slurm-JOBID.out
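Instead of copying the job ID by hand, you can capture it at submission time. sbatch --parsable prints only the job ID, followed by ;cluster on multi-cluster setups. The sketch below also accepts the default Submitted batch job <id> message. job_id_from is a hypothetical helper.

```bash
# Sketch: extract a numeric Slurm job ID from sbatch output.
job_id_from() {
  local out="$1"
  out="${out%%;*}"                 # drop an optional ";cluster" suffix
  out="${out##* }"                 # keep the last word of the default message
  [[ "$out" =~ ^[0-9]+$ ]] && printf '%s\n' "$out"
}

# Example:
#   jobid=$(job_id_from "$(sbatch --parsable job.sh)")
#   tail -f "slurm-${jobid}.out"
```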

Advanced Example: OpenMPI with Omni-Path Interconnect, UCX, and Slurm PMI-2

Building an OpenMPI Image Against Omni-Path, UCX, and Slurm PMI-2

Create psm2-pmi2-ompi4.def with the following content:

Bootstrap: docker

From: rockylinux/rockylinux:8

%post

dnf -y install wget git gcc gcc-c++ make file gcc-gfortran bzip2 \
    dnf-plugins-core findutils librdmacm-devel epel-release

dnf -y group install "Development tools"

crb enable

dnf -y install hwloc slurm-pmi slurm-pmi-devel

dnf install -y libpsm2 libpsm2-devel numactl-devel

git clone https://github.com/openucx/ucx.git ucx

cd ucx

git checkout v1.10.1

./autogen.sh

mkdir build

cd build

../configure --prefix=/opt/ucx/1.10.1

make -j $(nproc)

make install

git clone --recurse-submodules -b v4.1.5 https://github.com/open-mpi/ompi.git

cd ompi

./autogen.pl

mkdir build

cd build

../configure --with-hwloc=internal --prefix=/opt/openmpi/4.1.5 \
    --with-slurm --with-pmi=/usr --with-ucx=/opt/ucx/1.10.1

make -j $(nproc)

make install

Build the psm2-pmi2-ompi4.sif image

apptainer build psm2-pmi2-ompi4.sif psm2-pmi2-ompi4.def

Copy the image to ~/bin for a later step

cp psm2-pmi2-ompi4.sif ~/bin

Integrate Site-Specific MPI into the Rocky Linux 8-based OpenFOAM Image

Create psm2-pmi2-openfoam2306.def with the following content:

Bootstrap: localimage

From: psm2-pmi2-ompi4.sif

Stage: mpi

Bootstrap: localimage

From: openfoam2306.sif

Stage: runtime

%files from mpi

/opt/openmpi/4.1.5 /opt/openmpi/4.1.5

/opt/ucx/1.10.1 /opt/ucx/1.10.1

%post

dnf -y install hwloc numactl slurm-pmi librdmacm libpsm2

dnf -y clean all

%post

echo 'export MPI_ARCH_PATH=/opt/openmpi/4.1.5' > \
    $(bash /openfoam/assets/query.sh -show-prefix)/etc/config.sh/prefs.sys-openmpi

%runscript

exec /openfoam/run "$@"

Build psm2-pmi2-openfoam2306.sif image

apptainer build psm2-pmi2-openfoam2306.sif psm2-pmi2-openfoam2306.def

Move image to ~/bin

mv psm2-pmi2-openfoam2306.sif ~/bin

Now you can use the Omni-Path high-speed interconnect to run OpenFOAM tutorials with the psm2-pmi2-openfoam2306.sif image via Slurm.

Slurm Job Script for a Fully Containerized Model

This is a sample job script for a fully containerized model that we verified on our Omni-Path test environment.

“Fully containerized model”

We do not need to install MPI libraries on the host system. srun launches the MPI application containers directly and communicates with them over PMI-2.

#!/bin/bash

#SBATCH --partition=opa

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=32

# https://github.com/apptainer/apptainer/issues/769#issuecomment-1641428266

# https://github.com/apptainer/apptainer/issues/1583

apptainer instance start /home/ciq/bin/psm2-pmi2-openfoam2306.sif openfoam2306

#export OMPI_MCA_pml_ucx_verbose=100

export OMPI_MCA_pml=ucx

export OMPI_MCA_btl='^vader,tcp,openib,uct'

export UCX_LOG_LEVEL=info

export UCX_TLS=posix,sysv,cma,ib

export UCX_NET_DEVICES=hfi1_0:1

export SLURM_MPI_TYPE=pmi2

mkdir -p constant/triSurface

cp ~/openfoam/tutorials/resources/geometry/motorBike.obj.gz constant/triSurface/

apptainer run instance://openfoam2306 surfaceFeatureExtract

apptainer run instance://openfoam2306 blockMesh

apptainer run instance://openfoam2306 decomposePar

srun apptainer run instance://openfoam2306 snappyHexMesh -parallel -overwrite

srun apptainer run instance://openfoam2306 topoSet -parallel

ls -d processor* | xargs -I {} rm -rf ./{}/0

ls -d processor* | xargs -I {} cp -r 0.orig ./{}/0

srun apptainer run instance://openfoam2306 patchSummary -parallel

srun apptainer run instance://openfoam2306 potentialFoam -parallel -writephi

srun apptainer run instance://openfoam2306 checkMesh -parallel -writeFields '(nonOrthoAngle)' -constant

srun apptainer run instance://openfoam2306 simpleFoam -parallel

apptainer run instance://openfoam2306 reconstructParMesh -constant

apptainer run instance://openfoam2306 reconstructPar -latestTime

apptainer instance stop openfoam2306

Slurm Job Script for Hybrid Model

This is a sample job script for the hybrid model that we verified on our Omni-Path test environment.

“Hybrid model”

We need to install MPI libraries on the host system. We use mpirun and/or mpiexec to launch MPI applications and MPI application containers.

#!/bin/bash

#SBATCH --partition=opa

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=32

module load mpi/4.1.5/psm2-pmi2

# https://github.com/apptainer/apptainer/issues/769#issuecomment-1641428266

# https://github.com/apptainer/apptainer/issues/1583

apptainer instance start /home/ciq/bin/psm2-pmi2-openfoam2306.sif openfoam2306

#export OMPI_MCA_pml_ucx_verbose=100

export OMPI_MCA_pml=ucx

export OMPI_MCA_btl='^vader,tcp,openib,uct'

export UCX_LOG_LEVEL=info

export UCX_TLS=posix,sysv,cma,ib

export UCX_NET_DEVICES=hfi1_0:1

mkdir -p constant/triSurface

cp ~/openfoam/tutorials/resources/geometry/motorBike.obj.gz constant/triSurface/

apptainer run instance://openfoam2306 surfaceFeatureExtract

apptainer run instance://openfoam2306 blockMesh

apptainer run instance://openfoam2306 decomposePar

mpirun apptainer run instance://openfoam2306 snappyHexMesh -parallel -overwrite

mpirun apptainer run instance://openfoam2306 topoSet -parallel

ls -d processor* | xargs -I {} rm -rf ./{}/0

ls -d processor* | xargs -I {} cp -r 0.orig ./{}/0

mpirun apptainer run instance://openfoam2306 patchSummary -parallel

mpirun apptainer run instance://openfoam2306 potentialFoam -parallel -writephi

mpirun apptainer run instance://openfoam2306 checkMesh -parallel -writeFields '(nonOrthoAngle)' -constant

mpirun apptainer run instance://openfoam2306 simpleFoam -parallel

apptainer run instance://openfoam2306 reconstructParMesh -constant

apptainer run instance://openfoam2306 reconstructPar -latestTime

apptainer instance stop openfoam2306
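The fully containerized and hybrid job scripts differ only in the launcher placed in front of apptainer run instance://openfoam2306. One way to keep a single script for both models is a pair of wrapper functions, sketched below. of_serial, of_parallel, INSTANCE and LAUNCHER are hypothetical names. The fully containerized case still needs SLURM_MPI_TYPE=pmi2, and the hybrid case still needs the host MPI module loaded.

```bash
# Sketch: one job script body for both models; choose the launcher via LAUNCHER.
#   LAUNCHER=srun   -> Slurm starts the ranks (fully containerized model)
#   LAUNCHER=mpirun -> the host Open MPI starts the ranks (hybrid model)
INSTANCE="${INSTANCE:-openfoam2306}"
LAUNCHER="${LAUNCHER:-srun}"

of_serial() {    # serial utilities such as blockMesh or decomposePar
  apptainer run "instance://$INSTANCE" "$@"
}

of_parallel() {  # MPI steps such as snappyHexMesh or simpleFoam; appends -parallel
  "$LAUNCHER" apptainer run "instance://$INSTANCE" "$@" -parallel
}

# Example:
#   of_serial decomposePar
#   of_parallel snappyHexMesh -overwrite
```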

Swapping Approach for Other MPI Applications

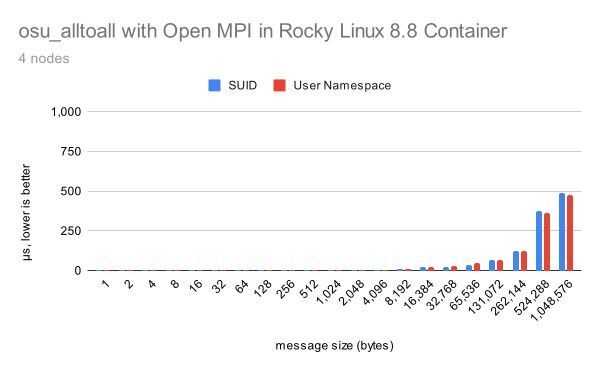

In this section, we show how the “swapping approach” works for other MPI applications, so that it covers a broader range of use cases. We use the Intel MPI Benchmarks (IMB) as an example.

Intel MPI Benchmark Container Built with Generic Configuration of OpenMPI

Create imb.def with the following content. This builds the Intel MPI Benchmarks against a generic build of OpenMPI. We will swap this MPI for the site-specific MPI in a later step.

Bootstrap: docker

From: rockylinux/rockylinux:8

%post

dnf -y install wget git gcc gcc-c++ make file gcc-gfortran bzip2 \
    dnf-plugins-core findutils librdmacm-devel epel-release

dnf -y group install "Development tools"

crb enable

%post

git clone --recurse-submodules -b v4.1.5 https://github.com/open-mpi/ompi.git

cd ompi

./autogen.pl

mkdir build

cd build

../configure --prefix=/opt/openmpi/4.1.5

make -j $(nproc)

make install

%post

cd /opt

git clone https://github.com/intel/mpi-benchmarks.git

cd mpi-benchmarks/src_c

export PATH=/opt/openmpi/4.1.5/bin:$PATH

make all

%runscript

/opt/mpi-benchmarks/src_c/IMB-MPI1 "$@"

Build imb.sif image

apptainer build imb.sif imb.def

Swapping MPI: Integrate Site-Specific MPI to the Intel MPI Benchmark Container

The test environment has an Omni-Path interconnect. Luckily, we already have psm2-pmi2-ompi4.sif, which we built earlier to provide the site-specific MPI for OpenFOAM. We will reuse this container here.

Create psm2-pmi2-imb.def file with the following content.

Bootstrap: localimage

From: /home/ciq/bin/psm2-pmi2-ompi4.sif

Stage: mpi

Bootstrap: localimage

From: imb.sif

Stage: imb

Bootstrap: docker

From: rockylinux/rockylinux:8

Stage: runtime

%files from mpi

/opt/openmpi/4.1.5 /opt/openmpi/4.1.5

/opt/ucx/1.10.1 /opt/ucx/1.10.1

%files from imb

/opt/mpi-benchmarks /opt/mpi-benchmarks

%post

dnf -y install epel-release

crb enable

dnf -y install hwloc numactl slurm-pmi librdmacm libpsm2

dnf -y clean all

%runscript

/opt/mpi-benchmarks/src_c/IMB-MPI1 "$@"

Build psm2-pmi2-imb.sif image

apptainer build psm2-pmi2-imb.sif psm2-pmi2-imb.def
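The swap works without relinking IMB because the site-specific Open MPI is installed at the same prefix, /opt/openmpi/4.1.5, that imb.sif was built against. To confirm which libmpi the benchmark resolves inside the new image, you could feed ldd output to a helper like the one below. resolved_libmpi is a hypothetical name.

```bash
# Sketch: print the path that `ldd` (output on stdin) resolved for libmpi.
resolved_libmpi() {
  awk '$1 ~ /^libmpi\.so/ && $2 == "=>" && $3 != "not" { print $3; exit }'
}

# Example:
#   apptainer exec psm2-pmi2-imb.sif ldd /opt/mpi-benchmarks/src_c/IMB-MPI1 | resolved_libmpi
```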

Move image to ~/bin

mv psm2-pmi2-imb.sif ~/bin

Run Intel MPI Benchmark: Slurm Job Script for Fully Containerized Model

#!/bin/bash

#SBATCH --partition=opa

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=32

# https://github.com/apptainer/apptainer/issues/769#issuecomment-1641428266

# https://github.com/apptainer/apptainer/issues/1583

apptainer instance start /home/ciq/bin/psm2-pmi2-imb.sif imb

#export OMPI_MCA_pml_ucx_verbose=100

export UCX_TLS=posix,sysv,cma,ib

export UCX_NET_DEVICES=hfi1_0:1

export UCX_LOG_LEVEL=info

export OMPI_MCA_pml=ucx

export OMPI_MCA_btl='^vader,tcp,openib,uct'

export SLURM_MPI_TYPE=pmi2

srun apptainer run instance://imb Alltoall

apptainer instance stop imb

Run Intel MPI Benchmark: Slurm Job Script for Hybrid Model

#!/bin/bash

#SBATCH --partition=opa

#SBATCH --nodes=1

#SBATCH --ntasks-per-node=32

module load mpi/4.1.5/psm2-pmi2

# https://github.com/apptainer/apptainer/issues/769#issuecomment-1641428266

# https://github.com/apptainer/apptainer/issues/1583

apptainer instance start /home/ciq/bin/psm2-pmi2-imb.sif imb

#export OMPI_MCA_pml_ucx_verbose=100

export UCX_TLS=posix,sysv,cma,ib

export UCX_NET_DEVICES=hfi1_0:1

export UCX_LOG_LEVEL=info

export OMPI_MCA_pml=ucx

export OMPI_MCA_btl='^vader,tcp,openib,uct'

mpirun apptainer run instance://imb Alltoall

apptainer instance stop imb

Sample output

#----------------------------------------------------------------

# Intel(R) MPI Benchmarks 2018, MPI-1 part

#----------------------------------------------------------------

# Date : Tue Aug 1 17:42:00 2023

# Machine : x86_64

# System : Linux

# Release : 4.18.0-477.15.1.el8_8.x86_64

# Version : #1 SMP Wed Jun 28 15:04:18 UTC 2023

# MPI Version : 3.1

# MPI Thread Environment:

# Calling sequence was:

# /opt/mpi-benchmarks/src_c/IMB-MPI1 Alltoall

# Minimum message length in bytes: 0

# Maximum message length in bytes: 4194304

#

# MPI_Datatype : MPI_BYTE

# MPI_Datatype for reductions : MPI_FLOAT

# MPI_Op : MPI_SUM
