Taming Cluster Deployment

The modern high performance computing cluster is a conglomeration of hardware and software components integrated to act like a single machine. With literally thousands of components that can all march at different speeds, the probability of integration problems quickly approaches 100%.
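To see why the odds climb so quickly, here is a minimal back-of-the-envelope sketch. It assumes, purely for illustration, that each component independently has the same small chance of causing an integration problem; the 0.1% rate and the function name are hypothetical, not measured figures.

```python
def prob_any_problem(n_components: int, p_each: float) -> float:
    """Chance that at least one of n components causes an integration problem.

    Assumes every component fails independently with the same probability,
    which is a simplification made only for this illustration.
    """
    return 1.0 - (1.0 - p_each) ** n_components


if __name__ == "__main__":
    # Hypothetical per-component problem rate of 0.1%.
    for n in (10, 100, 1000, 5000):
        print(f"{n:>5} components -> {prob_any_problem(n, 0.001):.1%}")
```

Under these assumptions, even a tiny per-component risk compounds into a near certainty once the component count reaches the thousands.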

Cluster building used to be a dark art: gathering all the components, grabbing whatever software infrastructure tools were available, and usually filling in the gaps with homegrown scripts. Integrating a working software stack that took advantage of the underlying hardware was a trial-and-error process. Incompatibilities were common, and cluster builders often dove into open source code to implement a change or hack that allowed components to work together. The process of delivering a system was usually measured in days or weeks.

The complexity of deploying a system resulted in many users inadvertently becoming cluster administrators and solution architects. These are people such as scientists, researchers, and engineers who set out to be domain experts in their respective fields. One day, the realization hits that their path has led them away from their domain and it’s too late to go back. I identify with this crowd, as I wound up in a place I did not expect to be: a builder of systems rather than a user of systems.

I’m happy with where I wound up in my journey, but it’s not a journey I want many people to make. We will always need people to focus on system-level improvements and issues, yet we don’t want too many experts leaving their fields to join the ranks of solution architects. We want domain specialists to be leaders in their domains. We want them to use clusters as a tool that lets them do great things.

CIQ, together with our partners and literally thousands of open source contributors, is here to help accomplish this mission. We can tame cluster complexity to help these domain specialists do their work. And by doing so, we will live vicariously through their achievements…which could include something that one day even saves our lives.

The Cluster Software Stack

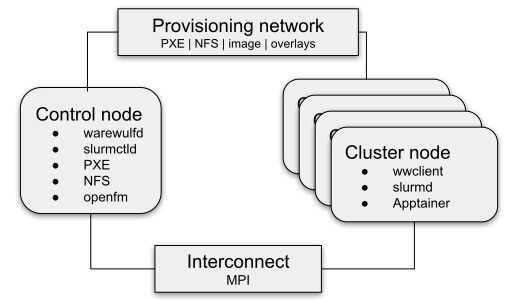

The key aggregation point of a high performance computing cluster is the software infrastructure, or software stack, that enables user applications. This stack includes the tooling that builds and installs the operating system, hardware drivers, and performance libraries on the compute nodes of a cluster. It is also responsible for configuring compute nodes to “act as one” from the user applications’ point of view. So, logically, the cluster software stack is where integration is at the forefront.

There can be a big difference between a functioning cluster and a well-functioning one. A well-designed software stack makes user applications sing, while a poorly designed stack won’t function at all. If only the world were actually that binary, but we live in analog. Arguably the worst-case scenario is a cluster that functions with marginal performance. Is that broken? Not technically, but it’s like getting 100 miles of charge from your EV when you were supposed to get around 200. A well-designed software stack must account for how driver-level software works together, how hardware and software configurations impact performance, and how performance libraries must be combined with system software to provide vendor-specific optimizations. It’s not just the laundry list of hardware and software components that makes up a cluster. It’s as much about how those components are put together.
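The "analog" point can be made concrete with a small sketch that compares a measured result against the expected one. The function name and the 90% cutoff for "marginal" are assumptions chosen for illustration, not a CIQ tool or an established threshold.

```python
def classify_cluster(measured: float, expected: float,
                     marginal_below: float = 0.9) -> str:
    """Label a system by how close measured performance is to expected.

    `marginal_below` is a hypothetical cutoff: anything under 90% of the
    expected figure is flagged as marginal rather than well functioning.
    """
    if expected <= 0:
        raise ValueError("expected must be positive")
    if measured <= 0:
        return "not functioning"
    ratio = measured / expected
    return "marginal" if ratio < marginal_below else "well functioning"


if __name__ == "__main__":
    # The EV analogy: 100 miles delivered against roughly 200 expected.
    print(classify_cluster(100, 200))
```

The same comparison applies to any benchmark figure a cluster is expected to reach; the hard part in practice is knowing what "expected" should be for a given combination of hardware and software.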

CIQ Cluster Software Stack Advantage

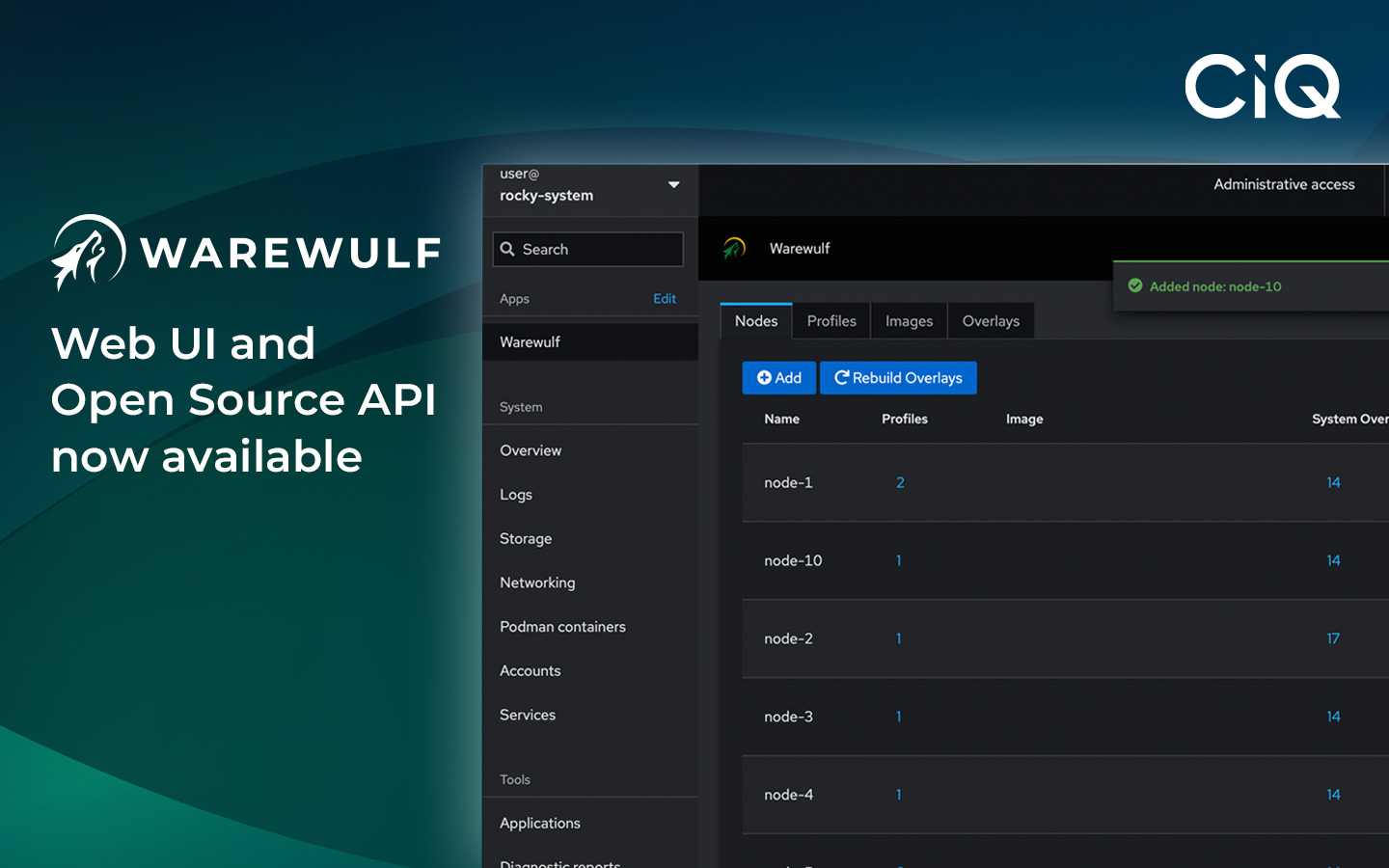

CIQ is in a strategic position to simplify the task of deploying HPC clusters. Our company has experts from across HPC, Linux, and enterprise environments. We are heavily involved in building and improving the Linux OS through Rocky Linux. We’re also drivers of and contributors to Apptainer for containerization of applications and environments, as well as Warewulf for cluster provisioning infrastructure. We collaborate with the OpenHPC community to help curate the large body of open source software and libraries that HPC applications leverage, and we’re able to work with silicon and hardware vendors to integrate their special sauce for performance optimizations.

In essence, we empower cluster administrators and caretakers to be forward-looking. CIQ takes care of the majority of the integration effort needed to pull together a stable and well-functioning software stack. This means better-functioning systems out of the gate, which also means less downtime. If users aren’t experiencing problems, there’s less day-to-day maintenance. That gives IT staff more time to focus on where users are going, to manage improvements, and to plan ahead instead of just keeping things running. This could include deeper investigations into advanced architectures for their user applications, managing a larger number of systems, or analyzing user needs to identify cost-of-computing optimizations.

Deploying HPC clusters is no longer a dark art. CIQ helps tame the complexities of building a software stack for HPC and helps simplify the deployment and use of the powerful technologies needed across research and enterprise environments. We don’t want lots of IT specialists to have to learn HPC clustering just to enable simulation. We want IT specialists to be experts in their own domain: representing and taking care of their users.

Built for Scale. Chosen by the World’s Best.

1.4M+ Rocky Linux instances being used worldwide

90% of Fortune 100 companies use CIQ-supported technologies

250k average monthly downloads of Rocky Linux