
Set Up an AWS EKS Hybrid Kubernetes Cluster with Rocky Linux

Introduction

Amazon EKS Hybrid Nodes (EKS-H) is a groundbreaking feature that enables seamless integration of on-premises and edge infrastructure with Amazon Elastic Kubernetes Service (EKS) clusters. Launched at re:Invent 2024, it significantly reduces operational complexity by allowing users to keep their Kubernetes control plane hosted on AWS while extending the familiar features and tools of EKS clusters to their on-premises environments.

In this blog post, we'll guide you through the process of setting up Amazon EKS Hybrid Nodes, effectively bridging your on-premises infrastructure with the power and flexibility of Amazon EKS. To maximize the benefits of this hybrid approach, we'll be leveraging Rocky Linux from CIQ. This combination offers the robust, community-driven foundation of Rocky Linux, enhanced with CIQ's enterprise-grade support, compliance features, and performance guarantees. By pairing Rocky Linux with EKS Hybrid Nodes, organizations can achieve a secure, efficient, and seamlessly integrated hybrid Kubernetes environment that meets the demands of modern, distributed applications.

Environment Explanation

Before diving in, let’s take a look at our environment architecture.

In our scenario, we are using a bare-metal server on Vultr to simulate our on-premises environment. It connects to AWS via a VPN, which in our case is Nebula. On the AWS side, we have two c4.4xlarge compute nodes running Kubernetes 1.30, automatically provisioned by an Auto Scaling group. The diagram below shows a simple, high-level view of the environment with IP addressing.

Server Information

- Node01: Our server has a public IPv4 address and a VPC 2.0 network interface with the address 10.254.0.3/20. We will configure kubelet to use the internal IP address.
- Lighthouse: Our EC2 VPN server has a public IP address and is connected to the same VPC as our EKS servers. It also performs SNAT for packets leaving Nebula and entering the AWS VPC.
- EKS Server 1/2: These servers are automatically provisioned with private IPs in the 10.128.0.0/16 address space.

Subnet Information

- 10.254.0.0/20: This acts as our node network and is the network Node01 listens on. It is also the subnet that Nebula uses.
- 10.254.16.0/20: This subnet is used for our pods and for networking within Kubernetes.
- 10.128.0.0/16: This is our EKS subnet.
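Since the node, pod, and EKS networks must not overlap, the plan above can be sanity-checked before anything is deployed. The following is a minimal POSIX sh sketch; the helper names (ip2int, cidr_bounds, cidrs_overlap, check_no_overlap) are our own and not part of any AWS or Kubernetes tool, and it handles IPv4 only.

```shell
# Sketch: detect overlapping IPv4 CIDRs using only POSIX sh arithmetic.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Print "first last" (as integers) for a CIDR such as 10.254.0.0/20.
cidr_bounds() {
  local ip="${1%/*}" bits="${1#*/}" size first
  size=$(( 1 << (32 - bits) ))
  first=$(( $(ip2int "$ip") & ~(size - 1) ))
  echo "$first $(( first + size - 1 ))"
}

# Succeed when the two CIDRs share at least one address:
# each range must start before the other one ends.
cidrs_overlap() {
  set -- $(cidr_bounds "$1") $(cidr_bounds "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Compare every pair of arguments; report clashes on stderr and fail if any.
check_no_overlap() {
  local rc=0 a b
  while [ $# -gt 1 ]; do
    a=$1
    shift
    for b in "$@"; do
      if cidrs_overlap "$a" "$b"; then
        echo "overlap: $a and $b" >&2
        rc=1
      fi
    done
  done
  return "$rc"
}
```

For our environment, check_no_overlap 10.254.0.0/20 10.254.16.0/20 10.128.0.0/16 should return success; a non-zero status and an "overlap:" line on stderr point to a clash.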

Prerequisites

To set up Amazon EKS Hybrid Nodes, ensure the following network access rules are configured and the corresponding ports are open.

| Component | Type | Protocol | Direction | Port | Source | Destination | Used for |
|---|---|---|---|---|---|---|---|
| Cluster Security Group | HTTPS | TCP | Inbound | 443 | Remote Node CIDR(s) | - | Kubelet to Kubernetes API server |
| Cluster Security Group | HTTPS | TCP | Inbound | 443 | Remote Pod CIDR(s) | - | Pods requiring access to K8s API server such as Cilium and Calico. |
| Cluster Security Group | HTTPS | TCP | Outbound | 10250 | - | Remote Node CIDR(s) | Kubernetes API server to Kubelet |
| Cluster Security Group | HTTPS | TCP | Outbound | Webhook ports | - | Remote Pod CIDR(s) | Kubernetes API server to webhook |
| On-prem-AWS firewall | HTTPS | TCP | Outbound | 443 | Remote Node CIDR(s) | EKS X-ENI IPs | Kubelet to Kubernetes API server |
| On-prem-AWS firewall | HTTPS | TCP | Outbound | 443 | Remote Pod CIDR(s) | EKS X-ENI IPs | Pods to Kubernetes API server |
| On-prem-AWS firewall | HTTPS | TCP | Inbound | 10250 | EKS X-ENI IPs | Remote Node CIDR(s) | Kubernetes API server to Kubelet |
| On-prem-AWS firewall | HTTPS | TCP | Inbound | Webhook ports | EKS X-ENI IPs | Remote Pod CIDR(s) | Kubernetes API server to webhook |
| On-prem host to host | HTTPS | TCP/UDP | Inbound/Outbound | 53 | Remote Pod CIDR(s) | Remote Pod CIDR(s) | Pods to CoreDNS |
| On-prem host to host | User-defined | User-defined | Inbound/Outbound | App ports | Remote Pod CIDR(s) | Remote Pod CIDR(s) | Pods to Pods |

You may also need to configure additional network access rules for your CNI's ports. In our scenario we will be using Cilium as our CNI; see the Cilium documentation for more information.

EKS hybrid nodes use the AWS IAM Authenticator and temporary IAM credentials provisioned by AWS SSM or AWS IAM Roles Anywhere to authenticate with the EKS cluster. Similar to the EKS node IAM role, you will create a Hybrid Nodes IAM Role. In our setup, we used IAM Roles Anywhere.

We will be using the following CLI tools: the AWS CLI, kubectl, Helm, jq, and OpenSSL.

The kubectl commands in this guide assume usage of the default ~/.kube/config file. If you use a custom kubeconfig file, add --kubeconfig to each of your kubectl commands with the path to your custom kubeconfig file.
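If you want to confirm the tools are present before starting, a small check like the following can help. This is a sketch: require_tools is a hypothetical helper, and the tool names in the usage line below are our assumption based on the commands in this guide.

```shell
# Sketch: report any named command that is not on PATH.
# Returns non-zero if at least one tool is missing.
require_tools() {
  local missing=0 tool
  for tool in "$@"; do
    if ! command -v "$tool" >/dev/null 2>&1; then
      echo "missing: $tool" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, run require_tools aws kubectl helm jq openssl curl on your workstation. On the hybrid node itself, kubectl is installed later by nodeadm, so checking for openssl and curl is enough there.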

VPN Setup

The communication between the EKS control plane and hybrid nodes is routed through the VPC and subnets you pass during EKS cluster creation. There are several well-documented methods for connecting an on-premises network to an AWS VPC, including AWS Site-to-Site VPN and AWS Direct Connect. You can also use various software-based VPN solutions, such as Libreswan or WireGuard; however, we opted for Nebula, a scalable, lightweight mesh VPN designed for secure and flexible network connections. The release engineering team at Rocky Linux maintains packages for Nebula in their repositories, which can be installed after installing the rocky-release-core package and enabling the core-infra repo using the dnf config-manager command or any other method.

We deployed a c5.large EC2 instance with a public IP address running Rocky Linux 9, configured to function as both a lighthouse and an exit node, enabling traffic routing into our EKS VPC. If you decide to use Nebula, be sure to add the appropriate VPC subnets to your EC2 server's certificate during creation, as well as the on-premises subnet to your local servers' certificates. In our setup, a route for 10.254.16.0/20 via 10.254.0.3 is configured on the lighthouse server (10.254.0.0/20 is already routed as part of the Nebula network), while 10.128.0.0/16 via 10.254.0.100 is configured on our on-premises server. The Nebula documentation covers these settings, and its quick start guide gives an overview of how to set up Nebula. We configured nftables to pass all traffic and masquerade (SNAT) between the on-premises and AWS VPC networks.
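The two routes described above map onto Nebula's tun.unsafe_routes setting. The sketch below, assuming a Nebula 1.x configuration layout, prints that fragment for one route; nebula_unsafe_route is a hypothetical helper, and the nebula-cert commands in the comments are illustrative only, so check the flag names against nebula-cert sign -h for your release.

```shell
# Sketch: print a Nebula tun.unsafe_routes fragment for one route.
# $1 = CIDR that lives behind a peer, $2 = that peer's Nebula overlay IP.
nebula_unsafe_route() {
  cat <<EOF
tun:
  unsafe_routes:
    - route: $1
      via: $2
EOF
}

# The peer named in "via" must also be allowed to serve that CIDR in its
# certificate. Illustrative nebula-cert 1.x commands (IPs from our setup):
#   nebula-cert sign -name lighthouse -ip 10.254.0.100/20 -subnets 10.128.0.0/16
#   nebula-cert sign -name node01 -ip 10.254.0.3/20 -subnets 10.254.16.0/20
```

On node01 you would merge the output of nebula_unsafe_route 10.128.0.0/16 10.254.0.100 into its config, and on the lighthouse the output of nebula_unsafe_route 10.254.16.0/20 10.254.0.3.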

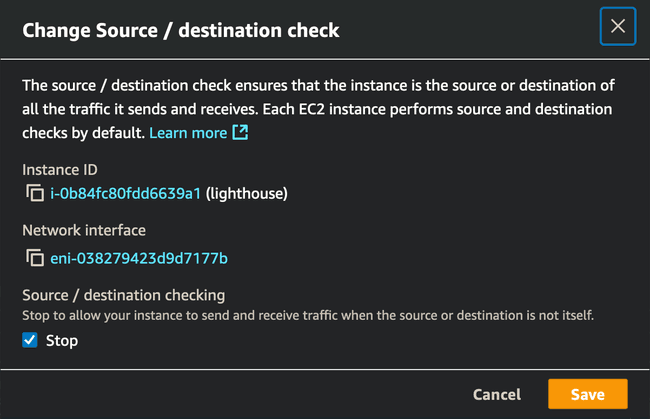

Whether you use Nebula or another software-based VPN, be sure to disable source/destination checking on the instance. Disabling source/destination checks allows an EC2 instance to route traffic that isn't explicitly addressed to it, enabling the NAT and routing functionality our EKS EC2 servers need to communicate with our on-premises network. To disable it, select the EC2 instance that will route VPN traffic. In the top right, select Actions > Networking > Change source/destination check. In the window that opens, select the button next to Stop.

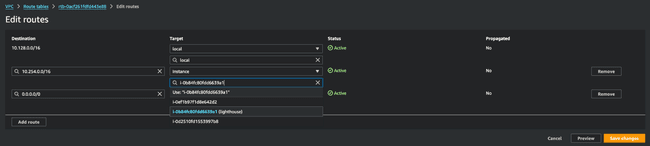

You will also need to ensure that the on-premises subnets are added to the route table associated with your VPC. In our example, 10.254.0.0/16 is added as a Destination and the Target is our lighthouse instance.
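Both console steps can also be scripted with the AWS CLI. A minimal sketch, assuming you supply the instance ID, route table ID, and CIDR; the function names are hypothetical wrappers around the real aws ec2 modify-instance-attribute and aws ec2 create-route commands.

```shell
# Sketch: CLI equivalents of the source/destination check and route steps.

# Same effect as Actions > Networking > Change source/destination check > Stop.
# $1 = lighthouse instance ID
disable_source_dest_check() {
  aws ec2 modify-instance-attribute --instance-id "$1" --no-source-dest-check
}

# Send traffic for the on-premises CIDR to the lighthouse instance.
# $1 = route table ID, $2 = on-premises CIDR, $3 = lighthouse instance ID
add_onprem_route() {
  aws ec2 create-route --route-table-id "$1" \
    --destination-cidr-block "$2" --instance-id "$3"
}
```

For example: disable_source_dest_check i-0123456789abcdef0 and add_onprem_route rtb-0123456789abcdef0 10.254.0.0/16 i-0123456789abcdef0 (placeholder IDs).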

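During our testing and conformance verification, we encountered several HTTP timeout errors related to MTU settings on the VPN. EC2 instances in a VPC use a 9001-byte (jumbo frame) MTU by default, but traffic leaving the VPC through an internet gateway is limited to 1500 bytes, and the VPN's own encapsulation reduces the usable size further. If you experience similar timeouts, you may need to lower the MTU on your tunnel as well.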
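One way to find a working value is to send pings with the Don't Fragment bit set and shrink the packet size until replies come back. This sketch assumes Linux iputils ping; payload_for_mtu and probe_mtu are hypothetical helpers. Run it against the lighthouse's public IP to measure the underlying path, or against an EKS node's private IP to test the path through the tunnel. For Nebula we believe the corresponding setting is tun.mtu, but confirm that in the Nebula documentation for your version.

```shell
# Sketch: find the largest MTU that crosses a path without fragmentation.

# ICMP echo payload that exactly fills an IPv4 packet of the given MTU:
# the MTU minus the 20-byte IPv4 header and the 8-byte ICMP header.
payload_for_mtu() {
  echo $(( $1 - 28 ))
}

# $1 = target address; remaining args = candidate MTUs, largest first.
# Prints the first candidate that gets a reply with Don't Fragment set.
probe_mtu() {
  local host="$1" mtu
  shift
  for mtu in "$@"; do
    if ping -c 1 -W 2 -M do -s "$(payload_for_mtu "$mtu")" "$host" >/dev/null 2>&1; then
      echo "$mtu"
      return 0
    fi
  done
  return 1
}
```

For example, probe_mtu 10.128.65.75 1500 1400 1300 1200 prints the first MTU in the list that gets a reply.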

Once your EKS EC2 instances are up and running, you should have connectivity between the instances and your on-premises network. To independently test this connectivity outside of your EKS instances, you can launch another Rocky Linux server within the same VPC and verify the connection.
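A quick reachability check for the ports in the firewall table earlier in this guide can be done without installing extra tools. This is a sketch that assumes bash (for its /dev/tcp pseudo-device) and coreutils timeout are available; check_tcp is a hypothetical helper name.

```shell
# Sketch: succeed if a TCP connection to host:port opens within the timeout.
# $1 = host, $2 = port, $3 = timeout in seconds (default 3)
# Uses bash's /dev/tcp pseudo-device, so bash must be installed.
check_tcp() {
  timeout "${3:-3}" bash -c 'exec 3<>"/dev/tcp/$1/$2"' _ "$1" "$2" 2>/dev/null
}
```

For example, from node01, check_tcp <EKS X-ENI IP> 443 should succeed once the VPN and security groups are in place.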

AWS Setup

Prerequisites

All steps that use the AWS CLI assume you have the latest version installed and configured with credentials. See the AWS CLI documentation for more details. The EKS cluster CloudFormation template assumes you have a preexisting VPC and subnets that meet the EKS and hybrid nodes requirements. You can refer to the AWS documentation for instructions on how to create them.

IAM Roles Anywhere

Download the IAM Roles Anywhere CloudFormation template

```shell
curl -OL 'https://raw.githubusercontent.com/aws/eks-hybrid/refs/heads/main/example/hybrid-ira-cfn.yaml'
```

Create a parameters.json file with your CA certificate body

You can use an existing CA, but we will create a new one for our demo.

```shell
openssl genrsa -out rootCA.key 3072
openssl req -x509 -nodes -sha256 -new -key rootCA.key -out rootCA.crt -days 731 \
  -subj "/CN=Custom Root" \
  -addext "keyUsage = critical, keyCertSign" \
  -addext "basicConstraints = critical, CA:TRUE, pathlen:0" \
  -addext "subjectKeyIdentifier = hash"
```

We can take our new rootCA.crt and create our parameters.json file. jq can be used to simplify the creation of this file.

```shell
jq -n --arg cert "$(sed '1d;$d' rootCA.crt | tr -d '\n')" '{"Parameters": {"CABundleCert": $cert}}' > parameters.json
```
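The sed step above strips the first and last lines of rootCA.crt, so it assumes the file holds exactly one certificate with no trailing blank line. If jq is not available, or the file might have trailing content, the same parameters.json can be produced in plain sh. A sketch; pem_body and make_ira_parameters are hypothetical helpers.

```shell
# Sketch: print the base64 body of the first PEM block in $1 on one line,
# skipping the BEGIN/END armor and anything after the END line.
pem_body() {
  awk '/^-----BEGIN /{inblock=1; next} /^-----END /{exit} inblock' "$1" | tr -d '\r\n'
}

# Base64 uses only [A-Za-z0-9+/=], so it can be embedded in JSON unescaped.
make_ira_parameters() {
  printf '{"Parameters": {"CABundleCert": "%s"}}\n' "$(pem_body "$1")"
}
```

Usage: make_ira_parameters rootCA.crt > parameters.json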

Deploy the IAM Roles Anywhere CloudFormation template

```shell
STACK_NAME=hybrid-beta-ira

aws cloudformation deploy \
  --stack-name ${STACK_NAME} \
  --template-file hybrid-ira-cfn.yaml \
  --parameter-overrides file://parameters.json \
  --tags Stack=${STACK_NAME} App=hybrid-eks-testing \
  --capabilities CAPABILITY_NAMED_IAM
```

Create EKS Cluster

Now that we have the CloudFormation template configured with our certificate authority, we can deploy a new hybrid EKS cluster. Full instructions can be found in the EKS Hybrid Nodes documentation.

Download the EKS cluster CloudFormation template

```shell
curl -OL 'https://raw.githubusercontent.com/aws/eks-hybrid/refs/heads/main/example/hybrid-eks-cfn.yaml'
```

Create Cluster with CloudFormation

We need to create a new file called cluster-parameters.json. Within this file, replace <SUBNET1_ID>, <SUBNET2_ID>, <SG_ID>, <REMOTE_NODE_CIDRS>, <REMOTE_POD_CIDRS>, and <K8S_VERSION> with the values for your environment.

```json
{
  "Parameters": {
    "ClusterName": "hybrid-eks-cluster",
    "SubnetId1": "<SUBNET1_ID>",
    "SubnetId2": "<SUBNET2_ID>",
    "SecurityGroupId": "<SG_ID>",
    "RemoteNodeCIDR": "<REMOTE_NODE_CIDRS>",
    "RemotePodCIDR": "<REMOTE_POD_CIDRS>",
    "ClusterAuthMode": "API_AND_CONFIG_MAP",
    "K8sVersion": "<K8S_VERSION>"
  }
}
```

This is what our cluster-parameters.json file looks like for our environment.

```json
{
  "Parameters": {
    "ClusterName": "hybrid-eks-cluster",
    "VPCId": "vpc-[id]",
    "SubnetId1": "subnet-[id1]",
    "SubnetId2": "subnet-[id2]",
    "RemoteNodeCIDR": "10.254.0.0/20",
    "RemotePodCIDR": "10.254.16.0/20"
  }
}
```

Now that we have our cluster parameters, we can deploy a new cluster.

```shell
STACK_NAME=hybrid-beta-eks-cluster

aws cloudformation deploy \
  --stack-name ${STACK_NAME} \
  --template-file hybrid-eks-cfn.yaml \
  --parameter-overrides file://cluster-parameters.json \
  --tags Stack=${STACK_NAME} App=hybrid-eks-testing \
  --capabilities CAPABILITY_NAMED_IAM
```

Check Cluster Status

To observe the status of your cluster creation, you can view your cluster in the EKS console.

Prepare EKS Cluster

Once your EKS cluster is Active, you need to update the VPC CNI with anti-affinity for hybrid nodes and add your Hybrid Nodes IAM Role to Kubernetes Role-Based Access Control (RBAC) in the aws-auth ConfigMap. Several of the commands and files below depend on the AWS region. We have chosen us-west-2; use whichever region you prefer.

Update local kubeconfig for your EKS cluster

Use aws eks update-kubeconfig to configure kubeconfig for your cluster. By default, the configuration file is created in the .kube folder in your home directory, or merged with an existing config file at that location. You can specify another path with the --kubeconfig option; if you do, you must use that kubeconfig file in all subsequent kubectl commands.

```shell
aws eks update-kubeconfig --region us-west-2 --name hybrid-eks-cluster
```

Update VPC CNI with anti-affinity for hybrid nodes

The VPC CNI is not supported on hybrid nodes because it relies on VPC resources to configure the interfaces for pods running on EC2 nodes. We will update the VPC CNI with anti-affinity for nodes labeled with the default hybrid nodes label eks.amazonaws.com/compute-type: hybrid.
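One way to apply this anti-affinity is a merge patch on the aws-node DaemonSet. This sketch assumes the stock aws-node affinity consists of the os, arch, and compute-type expressions shown below; check yours with kubectl get ds aws-node -n kube-system -o yaml first, because a JSON merge patch replaces the entire nodeSelectorTerms list. The function names are hypothetical; kubectl patch --type merge is standard.

```shell
# Sketch: JSON merge patch that keeps aws-node off hybrid (and Fargate) nodes.
# ASSUMPTION: the os/arch expressions mirror your current aws-node affinity;
# restate whatever your version already has, since lists are replaced.
aws_node_affinity_patch() {
  cat <<'EOF'
{"spec":{"template":{"spec":{"affinity":{"nodeAffinity":{
  "requiredDuringSchedulingIgnoredDuringExecution":{"nodeSelectorTerms":[{
    "matchExpressions":[
      {"key":"kubernetes.io/os","operator":"In","values":["linux"]},
      {"key":"kubernetes.io/arch","operator":"In","values":["amd64","arm64"]},
      {"key":"eks.amazonaws.com/compute-type","operator":"NotIn","values":["fargate","hybrid"]}
    ]}]}}}}}}}
EOF
}

# Apply the patch to the VPC CNI DaemonSet.
patch_aws_node() {
  kubectl patch daemonset aws-node -n kube-system --type merge \
    -p "$(aws_node_affinity_patch)"
}
```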

Map Hybrid Nodes IAM Role in Kubernetes RBAC

The Hybrid Nodes IAM Role needs to be mapped to a Kubernetes group with sufficient node permissions for hybrid nodes to be able to join the EKS cluster. In order to allow access through aws-auth ConfigMap, the EKS cluster must be configured with API_AND_CONFIG_MAP authentication mode. This is the default in the cluster-parameters.json, which we specified above.

We will need the role ARN in the next steps. We can retrieve it by running this command:

```shell
aws cloudformation describe-stacks \
  --stack-name hybrid-beta-ira \
  --query 'Stacks[].Outputs[?OutputKey==`IRARoleARN`].[OutputValue]' \
  --output text
```
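Several later steps need outputs from the hybrid-beta-ira stack, so a small wrapper avoids retyping the query. A sketch, using a JMESPath raw string literal ('...') in place of the backtick literal; stack_output is a hypothetical helper name.

```shell
# Sketch: print one CloudFormation stack output by its OutputKey.
# $1 = stack name, $2 = OutputKey (for example IRARoleARN)
stack_output() {
  aws cloudformation describe-stacks \
    --stack-name "$1" \
    --query "Stacks[0].Outputs[?OutputKey=='$2'].OutputValue" \
    --output text
}
```

Usage: ROLE_ARN=$(stack_output hybrid-beta-ira IRARoleARN). The same helper should work for the IRATrustAnchorARN and IRAProfileARN keys used when writing nodeConfig.yaml.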

Check to see if you have an existing aws-auth ConfigMap for your cluster.

```shell
kubectl describe configmap -n kube-system aws-auth
```

If you do not have an existing aws-auth ConfigMap for your cluster, we can create it with the following command.

Note: {{SessionName}} is the correct template format to save in the ConfigMap; do not replace it with other values.

```shell
kubectl apply -f=/dev/stdin <<-EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - groups:
      - system:bootstrappers
      - system:nodes
      rolearn: <ARN of the Hybrid Nodes IAM Role>
      username: system:node:{{SessionName}}
EOF
```
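To avoid hand-editing the placeholder, the manifest can be rendered with the ARN filled in. A minimal sketch; render_aws_auth is a hypothetical helper. The heredoc delimiter is unquoted so $1 expands, while {{SessionName}} passes through untouched because braces are not special in a heredoc.

```shell
# Sketch: print the aws-auth ConfigMap for a given Hybrid Nodes IAM Role ARN.
# $1 = ARN of the Hybrid Nodes IAM Role
render_aws_auth() {
  cat <<EOF
apiVersion: v1
kind: ConfigMap
metadata:
  name: aws-auth
  namespace: kube-system
data:
  mapRoles: |
    - groups:
      - system:bootstrappers
      - system:nodes
      rolearn: $1
      username: system:node:{{SessionName}}
EOF
}
```

Usage: render_aws_auth "$ROLE_ARN" | kubectl apply -f -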

If you do have an existing aws-auth ConfigMap for your cluster, we can edit this to add the Hybrid Nodes IAM Role.

```shell
kubectl edit -n kube-system configmap/aws-auth
```

Add the Hybrid Nodes IAM Role entry under mapRoles:

```yaml
data:
  mapRoles: |
    - groups:
      - system:bootstrappers
      - system:nodes
      rolearn: <ARN of the Hybrid Nodes IAM Role>
      username: system:node:{{SessionName}}
```

On-Prem Setup

Now that EKS is set up, we can focus on our on-premises server. In our environment, we disabled SELinux.

Prepare Node Certificates

To use IAM Roles Anywhere for authenticating hybrid nodes, you must have certificates and keys signed by the rootCA created during the IAM Roles Anywhere setup. These certificates and keys can be generated on any machine but must be present on your hybrid nodes before bootstrapping them into your EKS cluster. Each hybrid node requires a unique certificate and key placed in the following locations:

- Certificate: /etc/iam/pki/server.pem
- Key: /etc/iam/pki/server.key

If this directory doesn't exist, create it using:

```shell
sudo mkdir -p /etc/iam/pki
```

We can now generate a new certificate with the following openssl commands.

```shell
openssl ecparam -genkey -name secp384r1 -out node01.key
openssl req -new -sha512 -key node01.key -out node01.csr -subj "/C=US/O=AWS/CN=node01/OU=YourOrganization"
openssl x509 -req -sha512 -days 365 -in node01.csr -CA rootCA.crt -CAkey rootCA.key -CAcreateserial -out node01.pem -extfile <(printf "basicConstraints=critical,CA:FALSE\nkeyUsage=critical,digitalSignature")
```

This will create a new certificate with the name of node01. This name must be used in a later step as the nodeName in your nodeConfig.yaml that will be used when bootstrapping your nodes. Before moving or copying these certificates we can verify that they were generated correctly.

```shell
openssl verify -verbose -CAfile rootCA.crt node01.pem
```

If the certificate is valid you should see node01.pem: OK. You can now copy or move the certificates to the appropriate directory.

```shell
cp node01.pem /etc/iam/pki/server.pem
cp node01.key /etc/iam/pki/server.key
```
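Because the certificate name must match nodeName later on, it can be worth checking the CN and installing the files in one step. A sketch; cert_cn and install_node_cert are hypothetical helpers, and the 0600 mode on the key is our choice rather than a documented requirement.

```shell
# Sketch: print the CN from a certificate's subject.
# RFC 2253 output is one comma-separated line, e.g.
# "subject=OU=YourOrganization,CN=node01,O=AWS,C=US".
cert_cn() {
  openssl x509 -in "$1" -noout -subject -nameopt RFC2253 |
    sed -n 's/^.*CN=\([^,]*\).*$/\1/p'
}

# $1 = node name (expects $1.pem and $1.key in the current directory)
# $2 = target directory (default /etc/iam/pki)
install_node_cert() {
  local name="$1" dir="${2:-/etc/iam/pki}"
  if [ "$(cert_cn "$name.pem")" != "$name" ]; then
    echo "CN of $name.pem does not match $name" >&2
    return 1
  fi
  install -D -m 0644 "$name.pem" "$dir/server.pem"
  install -D -m 0600 "$name.key" "$dir/server.key"
}
```

Run it as root in the directory holding node01.pem and node01.key, for example install_node_cert node01.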

Connect Hybrid Nodes

Install nodeadm

Now that we have all the certificates in place we can install nodeadm. This tool will install the necessary dependencies as well as connect the server to the EKS cluster.

```shell
curl -OL 'https://hybrid-assets.eks.amazonaws.com/releases/latest/bin/linux/amd64/nodeadm'
```

We need to make sure that nodeadm is executable:

```shell
chmod +x nodeadm
```

We also need to install containerd, which we will download from the Docker community repository, along with a couple of other necessary packages.

```shell
dnf install -y yum-utils device-mapper-persistent-data lvm2
dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo
dnf update -y && dnf install -y containerd.io
```

Install Hybrid Node Dependencies

Now that we have the nodeadm tool and all the necessary packages, we can install the EKS dependencies onto the system:

```shell
./nodeadm install 1.30 --credential-provider iam-ra
```

This will install kubelet, kubectl, and the SSM / IAM Roles Anywhere components. You should see output resembling the following:

```text
{"level":"info","ts":...,"caller":"...","msg":"Loading configuration","configSource":"file://nodeConfig.yaml"}
{"level":"info","ts":...,"caller":"...","msg":"Validating configuration"}
{"level":"info","ts":...,"caller":"...","msg":"Validating Kubernetes version","kubernetes version":"1.30"}
{"level":"info","ts":...,"caller":"...","msg":"Using Kubernetes version","kubernetes version":"1.30.0"}
{"level":"info","ts":...,"caller":"...","msg":"Installing SSM agent installer..."}
{"level":"info","ts":...,"caller":"...","msg":"Installing kubelet..."}
{"level":"info","ts":...,"caller":"...","msg":"Installing kubectl..."}
{"level":"info","ts":...,"caller":"...","msg":"Installing cni-plugins..."}
{"level":"info","ts":...,"caller":"...","msg":"Installing image credential provider..."}
{"level":"info","ts":...,"caller":"...","msg":"Installing IAM authenticator..."}
{"level":"info","ts":...,"caller":"...","msg":"Finishing up install..."}
```

Before we continue, in our environment we need to change which IP address kubelet uses for the node. Because our server on Vultr was provisioned with both a public IP and a private IP, kubelet chose the public IP by default. We can change this by editing /etc/systemd/system/kubelet.service and adding, in our case, --node-ip=10.254.0.3. Our unit file looks like this after the edit:

```ini
[Unit]
Description=Kubernetes Kubelet
Documentation=https://github.com/kubernetes/kubernetes
After=containerd.service
Requires=containerd.service

[Service]
Slice=runtime.slice
EnvironmentFile=/etc/eks/kubelet/environment
ExecStartPre=/sbin/iptables -P FORWARD ACCEPT -w 5
ExecStart=/usr/bin/kubelet \
    --config /etc/kubernetes/kubelet/config.json \
    --kubeconfig /var/lib/kubelet/kubeconfig \
    --container-runtime-endpoint unix:///run/containerd/containerd.sock \
    --node-ip=10.254.0.3 \
    $NODEADM_KUBELET_ARGS \
    $KUBELET_EXTRA_ARGS
Restart=on-failure
RestartForceExitStatus=SIGPIPE
RestartSec=5
KillMode=process
CPUAccounting=true
MemoryAccounting=true

[Install]
WantedBy=multi-user.target
```

Once we save this, we can run systemctl daemon-reload to refresh systemd and then systemctl restart kubelet.
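Rather than hard-coding 10.254.0.3, the address can be picked as whichever host address falls inside the node CIDR. A minimal POSIX sh sketch; ip2int, in_cidr, and pick_node_ip are hypothetical helpers, and hostname -I in the usage line assumes the Linux hostname utility.

```shell
# Sketch: choose the host address that lies inside the node CIDR.

# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip2int() {
  local IFS=.
  set -- $1
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

# Succeed when IPv4 address $1 is inside CIDR $2.
in_cidr() {
  local bits="${2#*/}" mask
  mask=$(( ~((1 << (32 - bits)) - 1) & 4294967295 ))
  [ $(( $(ip2int "$1") & mask )) -eq $(( $(ip2int "${2%/*}") & mask )) ]
}

# $1 = node CIDR; remaining args = candidate addresses (IPv6 ones are skipped).
pick_node_ip() {
  local cidr="$1" ip
  shift
  for ip in "$@"; do
    case "$ip" in *:*) continue ;; esac
    if in_cidr "$ip" "$cidr"; then
      echo "$ip"
      return 0
    fi
  done
  return 1
}
```

On node01, pick_node_ip 10.254.0.0/20 $(hostname -I) should print 10.254.0.3, which can then be passed as --node-ip.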

Connect Hybrid Nodes to Your EKS Cluster

We now need to create a nodeConfig.yaml file that will provide nodeadm with all the details to connect to the cluster.

```yaml
apiVersion: node.eks.aws/v1alpha1
kind: NodeConfig
spec:
  cluster:
    name: hybrid-eks-cluster
    region: us-west-2
  hybrid:
    nodeName: node01
    iamRolesAnywhere:
      trustAnchorArn: <trust-anchor-arn>
      profileArn: <profile-arn>
      roleArn: arn:aws:iam::<ACCOUNT>:role/hybrid-beta-ira-intermediate-role
      assumeRoleArn: arn:aws:iam::<ACCOUNT>:role/hybrid-beta-ira-nodes
```

Some notes on the fields in nodeConfig.yaml:

- nodeName: This must match the name chosen in the Prepare Node Certificates step earlier (node01 in our case).
- trustAnchorArn: You can retrieve the trust anchor ARN with the following:

  ```shell
  aws cloudformation describe-stacks --stack-name hybrid-beta-ira --query 'Stacks[0].Outputs[?OutputKey==`IRATrustAnchorARN`].OutputValue' --output text
  ```

- profileArn: You can retrieve the profile ARN with the following:

  ```shell
  aws cloudformation describe-stacks --stack-name hybrid-beta-ira --query 'Stacks[0].Outputs[?OutputKey==`IRAProfileARN`].OutputValue' --output text
  ```

Your <ACCOUNT> ID is the 12-digit number that follows arn:aws:rolesanywhere:us-west-2: in the output of the previous commands.
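The ARNs can also be stitched into nodeConfig.yaml with a small template, deriving the account ID from the trust anchor ARN. A sketch that mirrors the file above; render_node_config, account_from_arn, and the CLUSTER_NAME variable are hypothetical, AWS_REGION is read if set, and the role names follow the hybrid-beta-ira stack used in this guide.

```shell
# Sketch: extract the account ID (fifth colon-separated field) from an ARN.
# ARN layout: arn:partition:service:region:account-id:resource
account_from_arn() {
  printf '%s\n' "$1" | cut -d: -f5
}

# $1 = node name, $2 = trust anchor ARN, $3 = profile ARN
render_node_config() {
  local account
  account=$(account_from_arn "$2")
  cat <<EOF
apiVersion: node.eks.aws/v1alpha1
kind: NodeConfig
spec:
  cluster:
    name: ${CLUSTER_NAME:-hybrid-eks-cluster}
    region: ${AWS_REGION:-us-west-2}
  hybrid:
    nodeName: $1
    iamRolesAnywhere:
      trustAnchorArn: $2
      profileArn: $3
      roleArn: arn:aws:iam::${account}:role/hybrid-beta-ira-intermediate-role
      assumeRoleArn: arn:aws:iam::${account}:role/hybrid-beta-ira-nodes
EOF
}
```

Usage: render_node_config node01 "$TRUST_ANCHOR_ARN" "$PROFILE_ARN" > nodeConfig.yaml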

Now we can connect our hybrid node to the EKS cluster!

```shell
sudo ./nodeadm init -c file://nodeConfig.yaml
```

If this command completes successfully, your node has joined the cluster! You can verify this on the Compute tab in the EKS console or by running kubectl get nodes:

```text
NAME                                          STATUS     ROLES    AGE   VERSION
ip-10-128-65-75.us-west-2.compute.internal    Ready      <none>   20d   v1.30.4-eks-a737599
ip-10-128-86-104.us-west-2.compute.internal   Ready      <none>   20d   v1.30.4-eks-a737599
node01                                        NotReady   <none>   21d   v1.30.0-eks-036c24
```

Note: Your hybrid nodes will report NotReady until a CNI is installed; this is expected.

Configure CNI

We are going to install Cilium as our CNI; however, Calico is also available.

Install Cilium

To install Cilium via Helm, we first need to add the Cilium Helm chart repository to our client:

```shell
helm repo add cilium https://helm.cilium.io/
```

Before we can install Cilium, we need to create a cilium-values.yaml file to pass the appropriate configuration to Cilium.

```yaml
affinity:
  nodeAffinity:
    requiredDuringSchedulingIgnoredDuringExecution:
      nodeSelectorTerms:
      - matchExpressions:
        - key: eks.amazonaws.com/compute-type
          operator: In
          values:
          - hybrid
ipam:
  mode: cluster-pool
  operator:
    clusterPoolIPv4MaskSize: 25
    clusterPoolIPv4PodCIDRList:
    - 10.254.16.0/20
operator:
  unmanagedPodWatcher:
    restart: false
```

The nodeAffinity configures Cilium to only run on the hybrid nodes. As such, we are only configuring our hybrid node IPv4 CIDR (10.254.16.0/20) under clusterPoolIPv4PodCIDRList. You can configure clusterPoolIPv4MaskSize based on your required pods per node as noted in the Cilium docs.
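The pool sizing is simple arithmetic: a /20 pool carved into /25 blocks yields 2^(25-20) = 32 per-node blocks of 2^(32-25) = 128 addresses each, so at most 32 hybrid nodes can receive a pod CIDR with these values. Cilium may use a few of each node's addresses for its own endpoints, so slightly fewer are left for pods. A sketch of the calculation; the function names are hypothetical.

```shell
# Sketch: capacity of a Cilium cluster-pool.
# $1 = prefix length of the clusterPoolIPv4PodCIDRList entry (20 for /20)
# $2 = clusterPoolIPv4MaskSize (25 in our values file)
pool_node_slots() {
  if [ "$2" -lt "$1" ] || [ "$2" -gt 32 ]; then
    echo "mask size must be between $1 and 32" >&2
    return 1
  fi
  echo $(( 1 << ($2 - $1) ))
}

# Number of addresses in each per-node block of size /$1.
addresses_per_node() {
  echo $(( 1 << (32 - $1) ))
}
```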

Now that this file is created, we can install Cilium into our cluster:

```shell
helm install cilium cilium/cilium --version 1.15.6 --namespace kube-system --values cilium-values.yaml
```

During our testing and validation, we ran into an issue with Cilium and session affinity not being enabled. As a result, we had to add --enable-session-affinity to the container arguments via kubectl edit daemonset cilium -n kube-system.

```yaml
# Additional lines omitted
[...]
      containers:
      - args:
        - --config-dir=/tmp/cilium/config-map
        - --enable-session-affinity
        command:
        - cilium-agent
[...]
```
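Because this change was made by hand, it is easy to lose track of whether it is still in place, for example after a later helm upgrade. A sketch of a check; has_session_affinity is a hypothetical helper, and it assumes the agent container is named cilium-agent, as in the upstream chart.

```shell
# Sketch: succeed if the cilium-agent container args include the flag.
# ASSUMPTION: the agent container in the cilium DaemonSet is named cilium-agent.
has_session_affinity() {
  kubectl get daemonset cilium -n kube-system \
    -o jsonpath='{.spec.template.spec.containers[?(@.name=="cilium-agent")].args}' |
    grep -q -e '--enable-session-affinity'
}
```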

Install the Emissary Ingress Controller

For our ingress, we will be installing Emissary.

Install Emissary

We will first add the Helm chart repository to our client:

```shell
helm repo add datawire https://app.getambassador.io
helm repo update
```

Now we can create a new namespace for Emissary and install it:

```shell
kubectl create namespace emissary
kubectl apply -f https://app.getambassador.io/yaml/emissary/3.9.1/emissary-crds.yaml
kubectl wait --timeout=90s --for=condition=available deployment emissary-apiext -n emissary-system
helm install emissary-ingress --namespace emissary datawire/emissary-ingress && \
  kubectl -n emissary wait \
    --for condition=available \
    --timeout=90s deploy \
    -lapp.kubernetes.io/instance=emissary-ingress
```

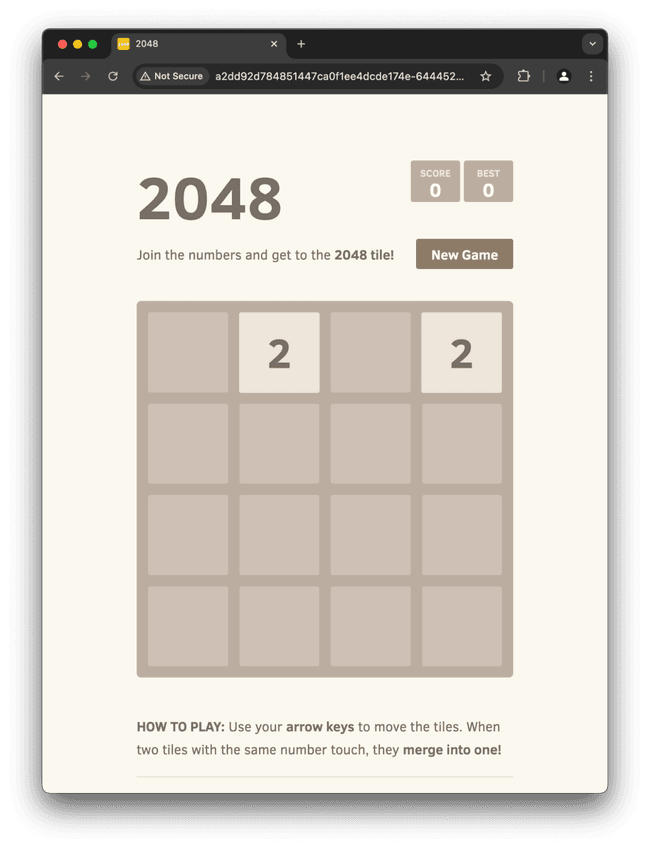

Verifying Our Cluster

We have installed everything we need and can move on to testing and verifying that our new hybrid cluster is working as intended! We'll deploy a pod, make sure it's running on node01, and then we will provision a load balancer within AWS to show that communication is possible between AWS and on-premises networks.

Creating a Pod

We are going to deploy a game of 2048 from gabrielecirulli/2048 using the container from alexwhen/docker-2048. Here is our definition file.

```yaml
apiVersion: v1
kind: Pod
metadata:
  labels:
    app: example-game
  name: example-game
  namespace: default
spec:
  nodeName: node01
  containers:
  - image: docker.io/alexwhen/docker-2048:latest
    imagePullPolicy: IfNotPresent
    name: example-game
    ports:
    - containerPort: 80
      name: http
      protocol: TCP
```

We can create this pod with kubectl create -f example-game.yaml and we can see it is up and running on our hybrid node.

```text
# kubectl get pod -o wide
NAME           READY   STATUS    RESTARTS   AGE   IP              NODE     NOMINATED NODE   READINESS GATES
example-game   1/1     Running   0          26s   10.254.16.156   node01   <none>           <none>
```

Creating a LoadBalancer

Next we will create a new load balancer within AWS to publicly expose our pod over the internet.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: example-game-lb
  namespace: default
  labels:
    app: example-game
spec:
  type: LoadBalancer
  selector:
    app: example-game
  ports:
  - port: 80
    targetPort: 80
```

We will create the new service with kubectl apply -f example-game-lb.yaml and we can verify the load balancer was successfully created!

```text
# kubectl get svc -o wide
NAME              TYPE           CLUSTER-IP       EXTERNAL-IP                                                               PORT(S)        AGE   SELECTOR
example-game-lb   LoadBalancer   172.20.214.114   a2dd92d784851447ca0f1ee4dcde174e-644452537.us-west-2.elb.amazonaws.com   80:30626/TCP   71s   app=example-game
```

Now we can visit the EXTERNAL-IP URL in our browser and enjoy our game!
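An ELB's DNS name can take a few minutes to resolve and start passing traffic, so a retry loop saves reloading the browser. A sketch; wait_for_http is a hypothetical helper, and the kubectl jsonpath in the usage line assumes a hostname-based load balancer ingress, as in the output above.

```shell
# Sketch: poll a URL until it answers with a non-error HTTP status.
# $1 = URL, $2 = attempts (default 30), $3 = seconds between attempts (default 10)
wait_for_http() {
  local tries="${2:-30}" delay="${3:-10}"
  while [ "$tries" -gt 0 ]; do
    if curl -fsS -o /dev/null --max-time 5 "$1"; then
      return 0
    fi
    tries=$((tries - 1))
    if [ "$tries" -gt 0 ]; then
      sleep "$delay"
    fi
  done
  return 1
}
```

Usage: LB=$(kubectl get svc example-game-lb -o jsonpath='{.status.loadBalancer.ingress[0].hostname}') && wait_for_http "http://$LB/"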

Conformance Testing

If you want to go one step further and verify the conformance of your cluster, you can do so with hydrophone. Once you download the tool, you can run the test with hydrophone --conformance. This test can take several hours to complete, but once it's finished you should see output similar to this:

```text
Ran 402 of 7199 Specs in 6495.253 seconds
SUCCESS! -- 402 Passed | 0 Failed | 0 Pending | 6797 Skipped
PASS

Ginkgo ran 1 suite in 1h48m15.940294477s
Test Suite Passed
```

If you do see an error similar to "should serve endpoints on same port and different protocols", note that this is a known issue with Cilium and should be fixed in the upcoming 1.17 release.

Conclusion

This guide has walked you through the process of setting up an EKS Hybrid Kubernetes Cluster with Rocky Linux, bridging on-premises infrastructure with Amazon EKS. By following these steps, you've created a flexible, scalable, and secure hybrid environment that offers the best of both worlds: the control of on-premises systems and the scalability of cloud services. This hybrid approach positions you well to meet the evolving demands of modern, distributed applications while maintaining flexibility in your infrastructure choices.

With Rocky Linux from CIQ, you can run the same OS with enterprise assurance across your entire infrastructure, no matter where it is deployed: in your AWS Cloud account, in your on-premises datacenter, and at the edge, on both virtual machines and bare-metal instances. Rocky Linux from CIQ is also available for container applications. With software supply chain validation, repository mirrors operated by CIQ and hosted on AWS, and limited indemnification available, you can run your fully RHEL-compatible environment at an optimized infrastructure cost. CIQ also offers optional enterprise support agreements that give you access to our expert Enterprise Linux engineers for assistance with operating issues you might experience in your application.

Visit us at ciq.com for more information, or contact us for a demo!
