
SMS foundation: The traditional OpenHPC way

Your System Management Server is the brain, nervous system, and beating heart of your HPC cluster, all rolled into one. Get it wrong and you'll be fighting configuration gremlins for years. Get it right and everything downstream becomes manageable. Not-so-fun fact: in our experience, a large share of cluster deployment failures trace back to SMS configuration mistakes made in the first couple of hours.

This installment is where we stop hand-waving and start CLI-ing. We're going to walk through the traditional OpenHPC deployment approach - similar to the one documented in the official installation guides, the one running on thousands of production clusters worldwide. This is the manual, config-file-editing, SSH-into-the-server method that HPC administrators have relied on for years.

And yes, it works. Brilliantly, even. But by the time we're done here, you'll understand exactly why experienced administrators are increasingly looking for alternatives.

Before we begin: what you'll need

To follow along hands-on with this post, you'll need at minimum:

For SMS configuration practice:

- 1 system with Rocky Linux 9 (VM or physical: 4 CPU cores, 8GB RAM, 100GB disk)

- 2 network interfaces (can be virtual NICs in VM environment)

- Purpose: Complete this entire post, understand SMS configuration fundamentals

Network setup:

- First interface: Management network (external connectivity)

- Second interface: Provisioning network (will be 192.168.1.0/24 private)

Optional for full experience:

- 2-3 compute node VMs (2 cores, 4GB RAM each) for later posts

- All systems on same virtual network with PXE boot enabled

Virtualization note: VirtualBox, VMware, or KVM all work well. Create an "Internal Network" or isolated virtual network for the provisioning interface to avoid conflicts with your broader network infrastructure.

Phase 1: The foundation

Starting with Rocky Linux 9

We're using Rocky Linux 9 because OpenHPC 3.x supports it today. Rocky Linux 10 arrived in June 2025, but at the time of writing, OpenHPC support for it (the 4.x series) is still to come. Rocky 9 gives you enterprise-grade stability with support through May 2032 - plenty of runway for your cluster's operational lifetime.

Start with a minimal server installation (NO Graphical Desktop Environment). During installation, configure your first network interface for external access and set a strong root password. We'll handle everything else after boot.

```bash
# First things first: update everything
dnf update -y

# Verify you're running Rocky 9
cat /etc/rocky-release

# Set your hostname - this is important
hostnamectl set-hostname sms.cluster.local

# Verify it took
hostnamectl
```

That hostname isn't optional. Slurm uses it for controller identification, Warewulf uses it for provisioning, and typos here will haunt you for hours when things mysteriously fail to communicate.
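Slurm and Warewulf also need that name to resolve to the SMS's internal address, and an /etc/hosts entry is a simple way to provide it. The sketch below uses a hypothetical helper called set_hosts_entry, which is not part of OpenHPC. It drops any existing line that maps the name, then appends a fresh one, so re-running it never leaves stale or duplicate entries. It assumes the 192.168.1.1 internal address configured in the next section.

```bash
#!/usr/bin/env bash
# set_hosts_entry FILE IP FQDN SHORT  (hypothetical helper)
# Removes any line whose hostname fields already mention FQDN or SHORT,
# keeps comments and unrelated entries, then appends "IP FQDN SHORT".
set_hosts_entry() {
    local file=$1 ip=$2 fqdn=$3 short=$4 tmp
    tmp=$(mktemp) || return 1
    awk -v f="$fqdn" -v s="$short" '
        /^[[:space:]]*#/ { print; next }          # keep comment lines
        {
            for (i = 2; i <= NF; i++)             # field 1 is the address
                if ($i == f || $i == s) next      # drop old mapping for this name
            print
        }' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
    printf '%s\t%s %s\n' "$ip" "$fqdn" "$short" >> "$tmp"
    cat "$tmp" > "$file"    # rewrite in place, keeping the file's owner and mode
    rm -f "$tmp"
}

# Example (as root):
# set_hosts_entry /etc/hosts 192.168.1.1 sms.cluster.local sms
# getent hosts sms
```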

Network architecture: Two networks, two purposes

Your SMS needs at least two network interfaces. Understanding why requires thinking about what your cluster actually does.

Management network (eth0/eno1): Your cluster's connection to civilization. Users SSH in here. Software updates download here. You administer the cluster remotely through here. This needs a routable/reachable IP address on your LAN.

Provisioning or internal network (eth1/eno2): Your cluster's private internal world. Compute nodes PXE boot here. DHCP runs here. Node-to-SMS communication happens here. This is typically an isolated private subnet that doesn't route to or see the outside world. It is usually something like 192.168.1.0/24, or a subnet carved from the private 172.16.0.0/12 or 10.0.0.0/8 ranges.
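If you choose your own subnet, confirm that it really falls inside one of those private (RFC 1918) ranges. Below is a minimal pure-bash sketch of that check. The names ip_to_int, in_cidr, and is_private_ipv4 are hypothetical helpers, not tools shipped with Rocky or OpenHPC.

```bash
#!/usr/bin/env bash
# ip_to_int A.B.C.D -> prints the address as a 32-bit integer; fails on bad input
ip_to_int() {
    local IFS=. a b c d octet
    read -r a b c d <<< "$1"
    for octet in "$a" "$b" "$c" "$d"; do
        # 1-3 digits, value 0-255 (10# avoids octal parsing of e.g. "08")
        [[ $octet =~ ^[0-9]{1,3}$ ]] && (( 10#$octet <= 255 )) || return 1
    done
    echo $(( (10#$a << 24) | (10#$b << 16) | (10#$c << 8) | 10#$d ))
}

# in_cidr IP NETWORK PREFIX -> success if IP lies inside NETWORK/PREFIX
in_cidr() {
    local ip net mask
    ip=$(ip_to_int "$1") && net=$(ip_to_int "$2") || return 1
    mask=$(( (0xFFFFFFFF << (32 - $3)) & 0xFFFFFFFF ))
    (( (ip & mask) == (net & mask) ))
}

# is_private_ipv4 IP -> success if IP is in 10/8, 172.16/12 or 192.168/16
is_private_ipv4() {
    in_cidr "$1" 10.0.0.0 8 || in_cidr "$1" 172.16.0.0 12 || in_cidr "$1" 192.168.0.0 16
}

# Example:
# is_private_ipv4 "$SMS_IP" || echo "warning: $SMS_IP is not a private address" >&2
```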

Rocky Linux 9 uses NetworkManager for network configuration. Here's how to set up your internal provisioning network:

```bash
# Define your internal network
export SMS_ETH_INTERNAL=eno2
export SMS_IP=192.168.1.1
export INTERNAL_NETMASK=255.255.255.0

# Check current connections
nmcli con show

# Create connection for internal network
nmcli con add type ethernet \
    con-name cluster-internal \
    ifname ${SMS_ETH_INTERNAL} \
    ipv4.addresses ${SMS_IP}/24 \
    ipv4.method manual \
    connection.autoconnect yes

# Bring it up
nmcli con up cluster-internal

# Verify (pinging your own address only proves it is assigned locally)
ip addr show ${SMS_ETH_INTERNAL}
ping -c 3 ${SMS_IP}
```

Gotcha #1: Interface configured but not responding? Check that it's actually up and set to autoconnect. Run nmcli con show cluster-internal and verify connection.autoconnect: yes.
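There is one more consistency trap here. The nmcli command hard-codes /24 even though INTERNAL_NETMASK is exported right above it, and later steps (Warewulf's configuration and the /etc/exports entries) also need the network address. The sketch below derives both values from the variables you have already set, so they cannot drift apart. The function names are hypothetical.

```bash
#!/usr/bin/env bash
# netmask_to_prefix 255.255.255.0 -> 24 (fails on malformed or non-contiguous masks)
netmask_to_prefix() {
    local IFS=. octet bits prefix=0 tail_zero=0 count=0
    for octet in $1; do
        count=$(( count + 1 ))
        case $octet in
            255) bits=8 ;; 254) bits=7 ;; 252) bits=6 ;; 248) bits=5 ;;
            240) bits=4 ;; 224) bits=3 ;; 192) bits=2 ;; 128) bits=1 ;;
            0)   bits=0 ;;
            *)   return 1 ;;
        esac
        # Once an octet below 255 appears, every later octet must be 0
        (( tail_zero && bits > 0 )) && return 1
        (( bits < 8 )) && tail_zero=1
        prefix=$(( prefix + bits ))
    done
    (( count == 4 )) || return 1
    echo "$prefix"
}

# network_of IP NETMASK -> network address (bitwise AND, octet by octet)
network_of() {
    local IFS=. ip mask
    read -r -a ip <<< "$1"
    read -r -a mask <<< "$2"
    echo "$(( 10#${ip[0]} & 10#${mask[0]} )).$(( 10#${ip[1]} & 10#${mask[1]} )).$(( 10#${ip[2]} & 10#${mask[2]} )).$(( 10#${ip[3]} & 10#${mask[3]} ))"
}

# Example:
# SMS_PREFIX=$(netmask_to_prefix "$INTERNAL_NETMASK")            # 24
# INTERNAL_NETWORK=$(network_of "$SMS_IP" "$INTERNAL_NETMASK")   # 192.168.1.0
# nmcli con mod cluster-internal ipv4.addresses "${SMS_IP}/${SMS_PREFIX}"
```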

Enabling OpenHPC repositories

OpenHPC makes this part straightforward - a single RPM configures everything:

```bash
# Install OpenHPC 3.x repository
dnf install -y \
    http://repos.openhpc.community/OpenHPC/3/EL_9/x86_64/ohpc-release-3-1.el9.x86_64.rpm

# Add EPEL (Extra Packages for Enterprise Linux)
dnf install -y epel-release

# Enable CodeReady Builder (CRB); "dnf config-manager" comes from dnf-plugins-core
dnf install -y dnf-plugins-core
dnf config-manager --set-enabled crb

# Verify everything is enabled
dnf repolist | grep -i openhpc
dnf repolist | grep -i epel
dnf repolist | grep -i crb
```

What just happened? The ohpc-release package added repository definitions to /etc/yum.repos.d/ and installed the OpenHPC GPG signing key that dnf uses to verify packages. EPEL provides dependencies OpenHPC needs. CRB supplies development headers and libraries.

Gotcha #2: Forgetting CRB causes weird dependency failures later when installing scientific libraries. Missing -devel packages? You skipped this step.
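The three greps in the repository step only print matches, so a missing repository is easy to overlook in a wall of output. The small sketch below reads dnf repolist output and fails, naming each repository ID it could not find. require_repos is a hypothetical helper. The sketch assumes the repository IDs are OpenHPC, epel, and crb, so check dnf repolist on your system if yours differ.

```bash
#!/usr/bin/env bash
# require_repos ID...  (hypothetical helper)
# Reads `dnf repolist` output on stdin (first line is the header) and
# fails, naming each missing ID, unless every ID is in the first column.
require_repos() {
    local listing id missing=0
    listing=$(awk 'NR > 1 { print $1 }')
    for id in "$@"; do
        # -x whole line, -i case-insensitive, -F literal match
        if ! grep -qixF -- "$id" <<< "$listing"; then
            echo "missing repository: $id" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Example:
# dnf repolist | require_repos OpenHPC epel crb && echo "all repositories enabled"
```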

Now install the base:

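```bash
# Install OpenHPC base meta-package
dnf install -y ohpc-base

# Verify installation: count installed OpenHPC packages
# (the exact number varies by release and by what was already installed)
rpm -qa | grep ohpc | wc -l

# Lmod's "module" command is a shell function; load it into the current
# shell (new login shells pick it up automatically)
source /etc/profile.d/lmod.sh

# Check Lmod (environment modules) works
module --version
```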

The ohpc-base package pulls in Lmod (the environment module system), documentation, and utilities. This is your foundation.

Phase 2: Manual configuration

Now comes the real work—making architectural decisions and hand-editing configuration files. Every choice you make here will affect your cluster's operation over its useful life.

Decision point 1: Warewulf or xCAT?

You need a provisioning system to deploy OS images to compute nodes. OpenHPC supports two:

Warewulf pioneered stateless provisioning more than 20 years ago. Nodes boot from the network, load their OS into RAM, and run entirely from memory. It is simple and focused, a great fit for homogeneous clusters, and the provisioner most OpenHPC deployments use.

xCAT offers more flexibility for heterogeneous environments with different hardware types and operating systems. More features, steeper learning curve, more configuration complexity.

Unless you have specific requirements demanding xCAT's flexibility, choose Warewulf. Trust us - we know a thing or two about Warewulf!

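```bash
# Install Warewulf server components
dnf install -y warewulf-ohpc

# Verify installation
rpm -q warewulf-ohpc

# Start the Warewulf daemon, which serves node images and overlays
systemctl enable --now warewulfd

# Initialize Warewulf
wwctl configure --all
```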

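Together these steps installed Warewulf and generated the DHCP, TFTP, NFS, SSH-key, and /etc/hosts configuration that will PXE boot and provision your compute nodes. Warewulf 4, the version shipped with OpenHPC 3.x, has no separate database. Node definitions live in a YAML file (/etc/warewulf/nodes.conf), and the warewulfd daemon serves images over HTTP itself.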
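Warewulf derives its DHCP and provisioning settings from the addresses in /etc/warewulf/warewulf.conf, so those addresses should describe your provisioning network. The OpenHPC install guide edits them to match before configuring. The sketch below assumes the Warewulf 4.x layout, where ipaddr, netmask, and network are unindented top-level keys. Check your installed file before relying on it. set_ww_network is a hypothetical helper, and after running it you re-run wwctl configure --all so the generated files pick up the change.

```bash
#!/usr/bin/env bash
# set_ww_network FILE IPADDR NETMASK NETWORK  (hypothetical helper)
# Rewrites only the unindented top-level ipaddr/netmask/network keys;
# indented keys inside sections such as dhcp: are left untouched.
set_ww_network() {
    local file=$1 key
    for key in ipaddr netmask network; do
        grep -q "^${key}:" "$file" || { echo "no top-level ${key}: in $file" >&2; return 1; }
    done
    sed -i -E \
        -e "s|^ipaddr:.*|ipaddr: $2|" \
        -e "s|^netmask:.*|netmask: $3|" \
        -e "s|^network:.*|network: $4|" \
        "$file"
}

# Example (as root):
# set_ww_network /etc/warewulf/warewulf.conf "$SMS_IP" "$INTERNAL_NETMASK" 192.168.1.0
# wwctl configure --all
```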

Decision point 2: Slurm or PBS Professional?

Resource managers decide which jobs run on which nodes when. OpenHPC supports two:

Slurm (Simple Linux Utility for Resource Management) is open source, runs on a majority of the systems on the TOP500 list of the world's most powerful supercomputers, and costs nothing. It has an active community and extensive documentation, which makes it the natural choice for academic and research environments.

PBS Professional offers commercial support, SLAs, and enterprise features. Better for organizations requiring vendor backing.

We're using Slurm for our walk-through:

```bash
# Install Slurm server components
dnf install -y ohpc-slurm-server
# This installs slurmctld (controller), slurmdbd (database daemon) and all the utilities you'll need

# Verify
rpm -qa | grep slurm-ohpc
slurmctld -V
```

Configuring the supporting cast

Before Slurm can work, you need supporting services configured. Grunt work, part deux.

Time synchronization:

```bash
# Install chrony (probably already installed)
dnf install -y chrony

# Allow internal network to sync from SMS
echo "allow 192.168.1.0/24" >> /etc/chrony.conf

# Enable, then restart so an already-running chronyd rereads its config
systemctl enable chronyd
systemctl restart chronyd

# Verify
chronyc sources -v
```

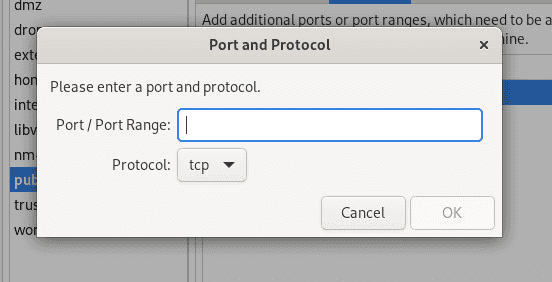
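Because echo >> appends every time it runs, re-running the chrony step leaves duplicate allow lines behind. The sketch below is an idempotent alternative that adds a line only when an identical line is not already present. append_once is a hypothetical helper.

```bash
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE unless an identical line exists
append_once() {
    local file=$1 line=$2
    # -x whole-line, -F literal match; a missing file counts as "absent"
    grep -qxF -- "$line" "$file" 2>/dev/null || printf '%s\n' "$line" >> "$file"
}

# Example (as root):
# append_once /etc/chrony.conf "allow 192.168.1.0/24" && systemctl restart chronyd
```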

Firewall configuration:

OpenHPC guides assume a disabled firewall because the cluster is expected to sit behind external protection. For testing, disable it. For production, be more deliberate and open only the ports you need (firewalls are not a bad idea):

```bash
# Testing: disable completely
systemctl stop firewalld
systemctl disable firewalld

# Production: open required ports
firewall-cmd --permanent --add-service=dhcp
firewall-cmd --permanent --add-service=tftp
firewall-cmd --permanent --add-service=http
firewall-cmd --permanent --add-service=nfs
firewall-cmd --permanent --add-service=ntp      # chrony serving time to compute nodes
firewall-cmd --permanent --add-port=9873/tcp    # warewulfd (Warewulf 4 default port)
firewall-cmd --permanent --add-port=6817/tcp    # Slurm slurmctld
firewall-cmd --permanent --add-port=6818/tcp    # Slurm slurmd
firewall-cmd --reload
```

Gotcha #3: Mysterious service failures? Disable firewall temporarily to rule it out. Error messages rarely mention that firewall rules are blocking things.

NFS exports:

Compute nodes need shared directories. We'll use /home for user files and /opt/ohpc/pub for OpenHPC-specific software:

```bash
# Install NFS server
dnf install -y nfs-utils

# Create/verify directories
mkdir -p /home
mkdir -p /opt/ohpc/pub

# Edit /etc/exports
cat >> /etc/exports << 'EOF'
/home 192.168.1.0/24(rw,sync,no_subtree_check,no_root_squash)
/opt/ohpc/pub 192.168.1.0/24(ro,sync,no_subtree_check)
EOF

# Enable and start NFS
systemctl enable nfs-server
systemctl start nfs-server

# Export shares
exportfs -a

# Verify
showmount -e localhost
```

SELinux:

SELinux provides security but can block legitimate cluster operations in non-obvious ways. OpenHPC guides recommend permissive mode:

```bash
# Check status
getenforce

# Set to permissive temporarily
setenforce 0

# Set permanently
sed -i 's/^SELINUX=.*/SELINUX=permissive/' /etc/selinux/config
```

Gotcha #4: SELinux in enforcing mode can block DHCP or TFTP with errors that never mention SELinux. The denials do land in the audit log (ausearch -m avc -ts recent shows recent ones). When troubleshooting mysterious failures, try setenforce 0 first.
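setenforce changes only the running mode, while /etc/selinux/config decides what happens after the next reboot, and the two can disagree. The sketch below reads the boot-time setting so you can compare it with getenforce. selinux_config_mode is a hypothetical helper.

```bash
#!/usr/bin/env bash
# selinux_config_mode [FILE]: print the SELINUX= value applied at next boot
# (reads /etc/selinux/config by default; fails if no SELINUX= line exists)
selinux_config_mode() {
    awk -F= '/^SELINUX=/ { mode = $2 } END { if (mode == "") exit 1; print mode }' \
        "${1:-/etc/selinux/config}"
}

# Example:
# echo "running: $(getenforce), at next boot: $(selinux_config_mode)"
```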

Configuring Slurm

Now we configure Slurm itself. This involves editing configuration files, setting permissions, generating keys, and praying you didn't make a typo (or worse, that we didn't publish one!).

```bash
# Copy OpenHPC template
cp /etc/slurm/slurm.conf.ohpc /etc/slurm/slurm.conf

# Set SMS hostname in slurm.conf (current Slurm uses SlurmctldHost;
# ControlMachine is the deprecated older name)
export SMS_NAME=$(hostname -s)
perl -pi -e "s/SlurmctldHost=\S+/SlurmctldHost=${SMS_NAME}/" \
    /etc/slurm/slurm.conf

# Create required directories with correct permissions
mkdir -p /var/spool/slurm/ctld
mkdir -p /var/log/slurm
chown slurm:slurm /var/spool/slurm/ctld
chown slurm:slurm /var/log/slurm
```
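The perl substitution succeeds silently even when its pattern matches nothing, so confirm that the controller name actually landed. The sketch below prints a parameter's value from a slurm.conf-style file. It ignores comments and compares parameter names case-insensitively, as Slurm does. slurm_conf_get is a hypothetical helper.

```bash
#!/usr/bin/env bash
# slurm_conf_get KEY FILE: print the value(s) of KEY from a slurm.conf-style file
slurm_conf_get() {
    awk -v key="$1" '
        { sub(/#.*/, "") }                    # strip whole-line and trailing comments
        index($0, "=") == 0 { next }          # skip lines without Key=Value
        {
            k = substr($0, 1, index($0, "=") - 1)
            gsub(/[[:space:]]/, "", k)
            if (tolower(k) != tolower(key)) next
            v = substr($0, index($0, "=") + 1)
            sub(/[[:space:]]+$/, "", v)
            print v
        }' "$2"
}

# Example:
# [ "$(slurm_conf_get SlurmctldHost /etc/slurm/slurm.conf)" = "$(hostname -s)" ] \
#     && echo "controller name OK" || echo "check SlurmctldHost in slurm.conf" >&2
```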

Every node needs the same Munge authentication key:

```bash
# Generate munge key (newer MUNGE releases also provide "mungekey --create")
create-munge-key -r

# Or manually - use one method or the other, since this overwrites any existing key:
dd if=/dev/urandom bs=1 count=1024 > /etc/munge/munge.key

# Set permissions (critical!)
chown munge:munge /etc/munge/munge.key
chmod 400 /etc/munge/munge.key

# Start munge
systemctl enable munge
systemctl start munge

# Test
munge -n | unmunge
```
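Copying or regenerating the key is where permission mistakes creep in. The sketch below is a pre-flight check that verifies the key exists, is owned by the munge user, and is not readable by group or others. check_munge_key is a hypothetical helper. To confirm the keys are identical across nodes, compare the output of sha256sum /etc/munge/munge.key on each one.

```bash
#!/usr/bin/env bash
# check_munge_key [FILE] [OWNER]: fail unless FILE is non-empty, owned by
# OWNER, and has mode 400 or 600 (defaults: /etc/munge/munge.key, munge)
check_munge_key() {
    local file=${1:-/etc/munge/munge.key} owner=${2:-munge} mode user
    [[ -s $file ]] || { echo "$file is missing or empty" >&2; return 1; }
    read -r mode user < <(stat -c '%a %U' "$file")
    [[ $user == "$owner" ]] || { echo "owner is $user, expected $owner" >&2; return 1; }
    [[ $mode == 400 || $mode == 600 ]] || { echo "mode is $mode, expected 400 or 600" >&2; return 1; }
}

# Example (as root): check_munge_key && systemctl restart munge
```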

Setting up accounting (optional but recommended):

Slurm accounting requires a database. Let's set up MariaDB:

```bash
# Install MariaDB
dnf install -y mariadb-server

# Start and enable
systemctl enable mariadb
systemctl start mariadb

# Secure it
mysql_secure_installation

# Create Slurm database (slurmdbpass is only an example - pick your own password)
mysql -u root -p << 'EOF'
CREATE DATABASE slurm_acct_db;
CREATE USER 'slurm'@'localhost' IDENTIFIED BY 'slurmdbpass';
GRANT ALL ON slurm_acct_db.* TO 'slurm'@'localhost';
FLUSH PRIVILEGES;
EOF
```
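The SQL above and the slurmdbd.conf below share the example password slurmdbpass. The sketch below generates a random password instead. gen_password is a hypothetical helper, and it keeps only letters and digits so the value needs no escaping in SQL or in slurmdbd.conf. To use the value, switch those heredocs from << 'EOF' to an unquoted << EOF and write ${SLURMDB_PASS} in place of the literal password.

```bash
#!/usr/bin/env bash
# gen_password [LENGTH]: print a random alphanumeric password (default 24 chars)
gen_password() {
    local len=${1:-24} pw=""
    # Read a small chunk of random bytes at a time, keep only [A-Za-z0-9]
    while (( ${#pw} < len )); do
        pw+=$(head -c 64 /dev/urandom | base64 | tr -dc 'A-Za-z0-9')
    done
    printf '%s\n' "${pw:0:len}"
}

# Example:
# SLURMDB_PASS=$(gen_password)
```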

Now create /etc/slurm/slurmdbd.conf:

```bash
cat > /etc/slurm/slurmdbd.conf << 'EOF'
AuthType=auth/munge
DbdHost=localhost
StorageType=accounting_storage/mysql
StorageHost=localhost
StorageUser=slurm
StoragePass=slurmdbpass
StorageLoc=slurm_acct_db
LogFile=/var/log/slurm/slurmdbd.log
PidFile=/var/run/slurmdbd.pid
SlurmUser=slurm
EOF

# Set permissions
chmod 600 /etc/slurm/slurmdbd.conf
chown slurm:slurm /etc/slurm/slurmdbd.conf
```

Add accounting to slurm.conf:

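```bash
cat >> /etc/slurm/slurm.conf << 'EOF'
# Accounting
AccountingStorageType=accounting_storage/slurmdbd
AccountingStorageHost=localhost
AccountingStoragePort=6819
AccountingStorageEnforce=associations,limits,qos
JobAcctGatherType=jobacct_gather/linux
JobAcctGatherFrequency=30
EOF

# Note: with AccountingStorageEnforce=associations, users can submit jobs only
# after they have an account association in the database (created with sacctmgr).
```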
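Appending with cat >> assumes the template does not already define these parameters. If slurm.conf.ohpc already carries, say, an AccountingStorageType or JobAcctGatherType line (check your copy), the file ends up with two different values for one key. The sketch below lists any parameter that is defined more than once. It skips NodeName, PartitionName, and NodeSet lines, which legitimately repeat. slurm_conf_dupes is a hypothetical helper.

```bash
#!/usr/bin/env bash
# slurm_conf_dupes FILE: print (lowercased) parameter names set on more than
# one non-comment line; names compare case-insensitively, as in Slurm
slurm_conf_dupes() {
    awk '
        { sub(/#.*/, "") }                    # strip comments
        index($0, "=") == 0 { next }          # skip blank and non Key=Value lines
        {
            k = tolower(substr($0, 1, index($0, "=") - 1))
            gsub(/[[:space:]]/, "", k)
            # Node, partition and nodeset definitions legitimately repeat
            if (k == "nodename" || k == "partitionname" || k == "nodeset") next
            count[k]++
        }
        END { for (k in count) if (count[k] > 1) print k }
    ' "$1" | sort
}

# Example:
# slurm_conf_dupes /etc/slurm/slurm.conf   # no output means no duplicates
```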

Finally, start the services in the correct order:

```bash
# Start database daemon FIRST
systemctl enable slurmdbd
systemctl start slurmdbd

# Wait for it to initialize
sleep 5

# Then start controller
systemctl enable slurmctld
systemctl start slurmctld

# Test
scontrol ping
sinfo
```

Gotcha #5: Starting slurmctld before slurmdbd is ready can cause the controller to fail. Order matters.
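A fixed sleep 5 is a guess. On a slow VM slurmdbd may need longer, and on a fast one you wait for nothing. The sketch below polls instead. wait_until is a hypothetical helper, and the usage example assumes that sacctmgr exits non-zero while it cannot reach slurmdbd.

```bash
#!/usr/bin/env bash
# wait_until TIMEOUT CMD [ARGS...]: re-run CMD once per second until it
# succeeds (return 0) or TIMEOUT seconds have passed (return 1)
wait_until() {
    local timeout=$1; shift
    local deadline=$(( SECONDS + timeout ))
    until "$@"; do
        (( SECONDS >= deadline )) && return 1
        sleep 1
    done
}

# Example (as root):
# systemctl start slurmdbd
# wait_until 60 sacctmgr -n show cluster >/dev/null 2>&1 || echo "slurmdbd not answering" >&2
# systemctl start slurmctld
```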

Validation: Did it actually work?

Before moving forward, verify everything:

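```bash
# Network connectivity
ip addr show
ping -c 3 ${SMS_IP}

# Repository access
dnf search ohpc | head -5

# Service status (dhcpd is configured by wwctl configure)
systemctl is-active chronyd nfs-server munge mariadb slurmdbd slurmctld dhcpd

# Environment modules
module avail
# gnu12 needs the compiler toolchain first: dnf install -y gnu12-compilers-ohpc
module load gnu12
module list
```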

If everything checks out, congratulations - your SMS is configured. You rock!

The reality check

Let's inventory what you just did over the past 2-4 hours:

Configuration points touched:

- 2 network interfaces configured

- 3 repositories enabled

- 50+ packages installed

- 6+ configuration files edited manually

- 8 services started and enabled

- 4 directories created with specific permissions

- 1 authentication key generated

- 1 database created and configured

- Multiple restart/reload cycles

Potential failure points:

- Typo in IP address → nodes can't reach SMS

- Wrong interface name → DHCP binds to wrong interface

- Missing firewall rule → TFTP timeouts

- Incorrect file permissions → service won't start

- SELinux blocking operations → mysterious failures

- Munge key mismatch → authentication failures

- Wrong service startup order → controller crashes

And this is just one SMS server. Production clusters need redundancy, which means doing all of this again, keeping configurations synchronized, and managing configuration drift over time. Six months from now when you need to build another cluster, you'll either have meticulous documentation or you'll be starting from scratch.

This approach works. And to be candid, thousands of clusters run this way successfully. But "works" and "scales elegantly" are different things.

What's next

Your SMS is configured using the traditional, battle-tested OpenHPC approach. You understand exactly what's involved in manual cluster deployment. You've edited the config files, managed the services, and handled the complexity.

In Part 2b, we're going to approach the exact same challenge using modern tools. You'll see how Warewulf's evolution and next-generation cluster orchestration platforms transform this multi-hour manual process into something dramatically simpler. We'll cover the same ground (SMS configuration, service setup, and cluster preparation) but with declarative configuration, web-based management, and automated validation.

After experiencing the traditional approach firsthand, you'll understand why administrators are adopting modern alternatives. Not because the old way doesn't work, but because cluster management should be engineering, not art.

This is Part 2a of a 7-part series (published as 6 posts) on deploying OpenHPC clusters with Rocky Linux.
